
mlflow_logger_v1 - Adding option to log last model #13


| Original file line number | Diff line number | Diff line change | ||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

|

@@ -11,6 +11,13 @@ | |||||||||||||||

| from spacy.training.loggers import console_logger | ||||||||||||||||

|

|

||||||||||||||||

|

|

||||||||||||||||

| class ModelDir: | ||||||||||||||||

| def __init__(self) -> None: | ||||||||||||||||

| self.path = None | ||||||||||||||||

|

|

||||||||||||||||

| def update(self, path: str) -> None: | ||||||||||||||||

| self.path = path | ||||||||||||||||

|

|

||||||||||||||||

| # entry point: spacy.MLflowLogger.v1 | ||||||||||||||||

| def mlflow_logger_v1( | ||||||||||||||||

| run_id: Optional[str] = None, | ||||||||||||||||

|

|

@@ -19,6 +26,7 @@ def mlflow_logger_v1( | |||||||||||||||

| nested: bool = False, | ||||||||||||||||

| tags: Optional[Dict[str, Any]] = None, | ||||||||||||||||

| remove_config_values: List[str] = [], | ||||||||||||||||

| log_latest_dir: bool = True, | ||||||||||||||||

| ): | ||||||||||||||||

| try: | ||||||||||||||||

| import mlflow | ||||||||||||||||

|

|

@@ -33,7 +41,7 @@ def mlflow_logger_v1( | |||||||||||||||

| ) | ||||||||||||||||

|

|

||||||||||||||||

| console = console_logger(progress_bar=False) | ||||||||||||||||

|

|

||||||||||||||||

| def setup_logger( | ||||||||||||||||

| nlp: "Language", stdout: IO = sys.stdout, stderr: IO = sys.stderr | ||||||||||||||||

| ) -> Tuple[Callable[[Dict[str, Any]], None], Callable[[], None]]: | ||||||||||||||||

|

|

@@ -58,6 +66,15 @@ def setup_logger( | |||||||||||||||

| mlflow.log_params({k.replace("@", ""): v for k, v in batch}) | ||||||||||||||||

|

|

||||||||||||||||

| console_log_step, console_finalize = console(nlp, stdout, stderr) | ||||||||||||||||

|

|

||||||||||||||||

| if log_latest_dir: | ||||||||||||||||

| latest_model = ModelDir() | ||||||||||||||||

|

|

||||||||||||||||

| def log_model(path, name): | ||||||||||||||||

| mlflow.log_artifacts( | ||||||||||||||||

| path, | ||||||||||||||||

| name | ||||||||||||||||

| ) | ||||||||||||||||

|

Comment on lines +73 to +77

Contributor

Similar: since this is just a wrapper without added functionality, let's use |

||||||||||||||||

|

|

||||||||||||||||

| def log_step(info: Optional[Dict[str, Any]]): | ||||||||||||||||

| console_log_step(info) | ||||||||||||||||

|

|

@@ -66,23 +83,34 @@ def log_step(info: Optional[Dict[str, Any]]): | |||||||||||||||

| other_scores = info["other_scores"] | ||||||||||||||||

| losses = info["losses"] | ||||||||||||||||

| output_path = info.get("output_path", None) | ||||||||||||||||

| if log_latest_dir: | ||||||||||||||||

| latest_model.update(output_path) | ||||||||||||||||

|

|

||||||||||||||||

| if score is not None: | ||||||||||||||||

| - mlflow.log_metric("score", score) | ||||||||||||||||

| + mlflow.log_metric("score", score, info["step"]) | ||||||||||||||||

| if losses: | ||||||||||||||||

| - mlflow.log_metrics({f"loss_{k}": v for k, v in losses.items()}) | ||||||||||||||||

| + mlflow.log_metrics({f"loss_{k}": v for k, v in losses.items()}, info["step"]) | ||||||||||||||||

| if isinstance(other_scores, dict): | ||||||||||||||||

| mlflow.log_metrics( | ||||||||||||||||

| { | ||||||||||||||||

| k: v | ||||||||||||||||

| for k, v in util.dict_to_dot(other_scores).items() | ||||||||||||||||

| if isinstance(v, float) or isinstance(v, int) | ||||||||||||||||

| - } | ||||||||||||||||

| + }, | ||||||||||||||||

| + info["step"] | ||||||||||||||||

| ) | ||||||||||||||||

| if output_path and score == max(info["checkpoints"])[0]: | ||||||||||||||||

| nlp = load(output_path) | ||||||||||||||||

| mlflow.spacy.log_model(nlp, "best") | ||||||||||||||||

| log_model(output_path, 'model_best') | ||||||||||||||||

|

Comment on lines 104 to +105

Contributor

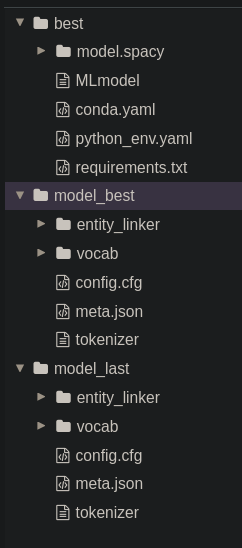

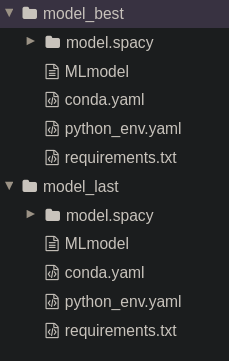

It irks me that now we have three artifacts -

Contributor

Hm, I ran this with

    def finalize() -> None:
        if log_latest_dir:
            mlflow.spacy.log_model(nlp, "model_last")

now and this seemed to work (see image). What's your outcome? |
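For readers following the metric changes in the hunk above, here is a minimal sketch of how the per-step metrics could be gathered and logged with an explicit step. It is not the PR's code: `dict_to_dot` is a stand-in for spaCy's `util.dict_to_dot`, `collect_metrics` and `log_step_metrics` are hypothetical helper names, and the `log_metrics` parameter is an injected callable that is assumed to accept `(metrics, step=...)` the way `mlflow.log_metrics` does. Unlike the diff, which makes three separate logging calls, the sketch merges everything into one call.

```python
from typing import Any, Callable, Dict, Union

Number = Union[int, float]


def dict_to_dot(obj: Dict[str, Any], prefix: str = "") -> Dict[str, Any]:
    """Flatten nested dicts into dot-separated keys (stand-in for spaCy's helper)."""
    flat: Dict[str, Any] = {}
    for key, value in obj.items():
        name = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(dict_to_dot(value, name))
        else:
            flat[name] = value
    return flat


def collect_metrics(info: Dict[str, Any]) -> Dict[str, Number]:
    """Build one flat metrics dict from a training-step info dict (hypothetical helper)."""
    metrics: Dict[str, Number] = {}
    if info.get("score") is not None:
        metrics["score"] = info["score"]
    for name, loss in (info.get("losses") or {}).items():
        metrics[f"loss_{name}"] = loss
    other_scores = info.get("other_scores")
    if isinstance(other_scores, dict):
        for name, value in dict_to_dot(other_scores).items():
            # Same filter as the diff: keep only int/float values.
            if isinstance(value, (int, float)):
                metrics[name] = value
    return metrics


def log_step_metrics(
    info: Dict[str, Any],
    log_metrics: Callable[..., None],
) -> None:
    """Log all metrics for one step, tagging them with the training step."""
    metrics = collect_metrics(info)
    if metrics:
        # Passing the step keeps metric history aligned with training progress.
        log_metrics(metrics, step=info["step"])
```

In a real logger, `mlflow.log_metrics` could be passed as the `log_metrics` argument; injecting it keeps the sketch runnable without an active MLflow run.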

||||||||||||||||

|

|

||||||||||||||||

|

|

||||||||||||||||

| def finalize() -> None: | ||||||||||||||||

|

|

||||||||||||||||

| if log_latest_dir: | ||||||||||||||||

| log_model(latest_model.path, 'model_last') | ||||||||||||||||

|

|

||||||||||||||||

| print('End run') | ||||||||||||||||

|

Comment on lines +109 to +113

Contributor


Suggested change

|

||||||||||||||||

| console_finalize() | ||||||||||||||||

| mlflow.end_run() | ||||||||||||||||

|

|

||||||||||||||||

|

|

||||||||||||||||


I think we can ditch `ModelDir` - let's use a simple string for the latest model path (`latest_model_dir` or similar).
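One way the suggestion above might look is sketched below, assuming the path is kept in a closure variable and rebound with `nonlocal`. This is an illustration, not the merged implementation: `make_logger` is a hypothetical name, and `log_artifacts` is an injected callable standing in for `mlflow.log_artifacts(local_dir, artifact_path)` so the sketch runs without MLflow. It also assumes the path should only be updated when `output_path` is actually set, which differs from the diff, where `latest_model.update(output_path)` is called unconditionally.

```python
from typing import Any, Callable, Dict, Optional, Tuple


def make_logger(
    log_artifacts: Callable[[str, str], None],
    log_latest_dir: bool = True,
) -> Tuple[Callable[[Optional[Dict[str, Any]]], None], Callable[[], None]]:
    """Return (log_step, finalize) callbacks that track the latest model path."""
    # A plain string replaces the ModelDir wrapper; None until a checkpoint is seen.
    latest_model_dir: Optional[str] = None

    def log_step(info: Optional[Dict[str, Any]]) -> None:
        nonlocal latest_model_dir
        if info is None:
            return
        output_path = info.get("output_path")
        # Assumption: ignore steps that did not write a model directory.
        if log_latest_dir and output_path:
            latest_model_dir = output_path

    def finalize() -> None:
        # Upload the last saved model directory once, at the end of training.
        if log_latest_dir and latest_model_dir is not None:
            log_artifacts(latest_model_dir, "model_last")

    return log_step, finalize
```

Because `latest_model_dir` lives in the enclosing scope, both callbacks share it without an extra class, and `finalize` skips the upload when no model directory was ever reported.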