mlflow_logger_v1 - Adding option to log last model#13

mlflow_logger_v1 - Adding option to log last model#13adin786 wants to merge 2 commits intoexplosion:mainfrom

Conversation

|

Just to add, I probably did an overkill/hacky thing by making a class just to persist the model path for later uploading. Would be cleaner if I just used I'll wait for feedback before messing with this. The point is, just like the wandb logger I just want to pass the model path forward so I can call |

|

Hi, thanks for your contribution! We'll review the code and get back to you :-) |

rmitsch

left a comment

rmitsch

left a comment

There was a problem hiding this comment.

Thanks for your contribution, @adin786! Sorry it took me so long to review this.

Only some minor comments - and the issue with not being able to log artifacts with mlflow.spacy.log_model() outside of log_step() I'll look into.

| class ModelDir: | ||

| def __init__(self) -> None: | ||

| self.path = None | ||

|

|

||

| def update(self, path: str) -> None: | ||

| self.path = path | ||

|

|

There was a problem hiding this comment.

I think we can ditch ModelDir - let's use a simple string for the latest model path (latest_model_dir or similar).

| def log_model(path, name): | ||

| mlflow.log_artifacts( | ||

| path, | ||

| name | ||

| ) |

There was a problem hiding this comment.

Similar: since this is just a wrapper without added functionality, let's use mlflow.log_artifacts() directly.

| mlflow.spacy.log_model(nlp, "best") | ||

| log_model(output_path, 'model_best') |

There was a problem hiding this comment.

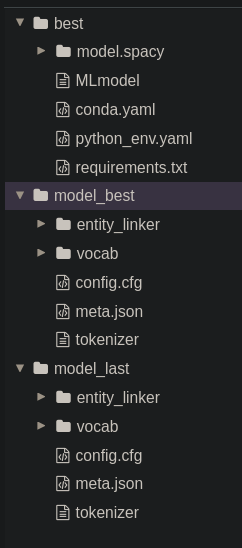

It irks me that now we have three artifacts - best, model_best and model_last. Ideally I'd like to have best and last, both the complete set of associated data (like best has, see screenshot). Just for the sake of consistency. If that doesn't work out due to the issues you described, I guess this is fine. I'll look into this.

There was a problem hiding this comment.

Note: I ended up using mlflow.log_artifact() rather than mlflow.spacy.log_model() because when I use the latter method outside of the log_step() function it looks like spacy just aborts the process abruptly, without letting the mlflow.spacy function do its thing.

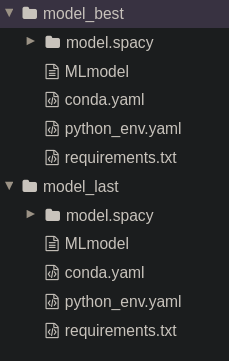

Hm, I ran this with

def finalize() -> None:

if log_latest_dir:

mlflow.spacy.log_model(nlp, "model_last")now and this seemed to work (see image). What's your outcome?

|

|

||

| if log_latest_dir: | ||

| log_model(latest_model.path, 'model_last') | ||

|

|

||

| print('End run') |

There was a problem hiding this comment.

| if log_latest_dir: | |

| log_model(latest_model.path, 'model_last') | |

| print('End run') | |

| if log_latest_dir: | |

| log_model(latest_model.path, 'model_last') |

I noticed the mlflow logger only seems to log the "best" model. This is a bit confusing as the metrics summary mlflow displays, gives the results for the "last" model.

Anyway the wandb logger and the clearml logger both look like they include some functionaliity to choose which model folder to track (or both). So I added functionality to log the "last" model with a boolean option in the

[training.logger]config. I thought Id stick to a similar pattern as the one implemented in the wandb logger (which handles this aspect neatly).Note: I ended up using

mlflow.log_artifact()rather thanmlflow.spacy.log_model()because when I use the latter method outside of thelog_step()function it looks like spacy just aborts the process abruptly, without letting the mlflow.spacy function do its thing. It works if I put it in the log_step() function but I wanted to just trigger an upload at the end... otherwise we'd seriously slow down the training process by forcing a model upload every training step. Perhaps I could use some feedback on this partSeparately, I also added the "step" value to each

mlflow.log_metrics()call as this just makes the mlflow plot visualisations a bit nicer if you want to view it by step rather than by time on the x axis.