
As a user, I can see the summary result #9


base: feature/scrap-google-result


    end

    defmodule GoogleCrawler.Google.Scrapper do
      alias GoogleCrawler.Google.ScrapperResult


Where is ScrapperResult defined?


@olivierobert In this file above here 😆 ⬆️


Oh I see. Not sure about having more than one module per file though 🤔


Agree 👍 I think it's better to separate it 🤔

236cd35 to 007238c

    @@ -1,12 +1,74 @@
    defmodule GoogleCrawler.Google.Scrapper do


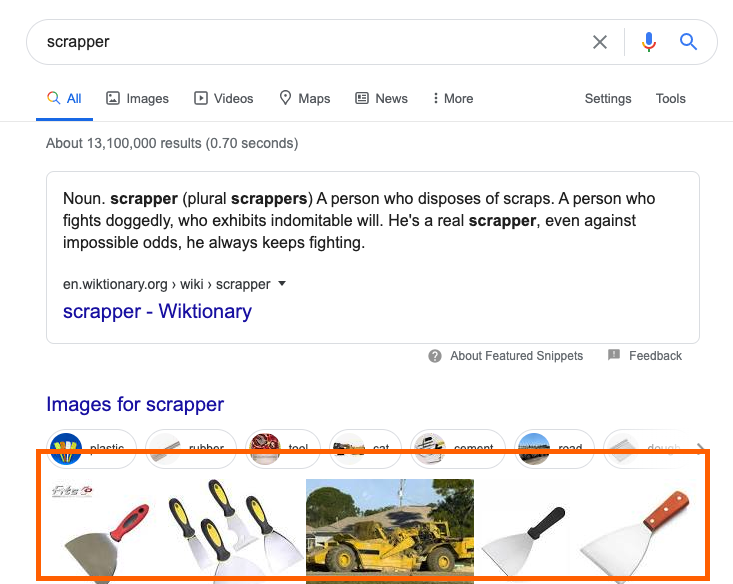

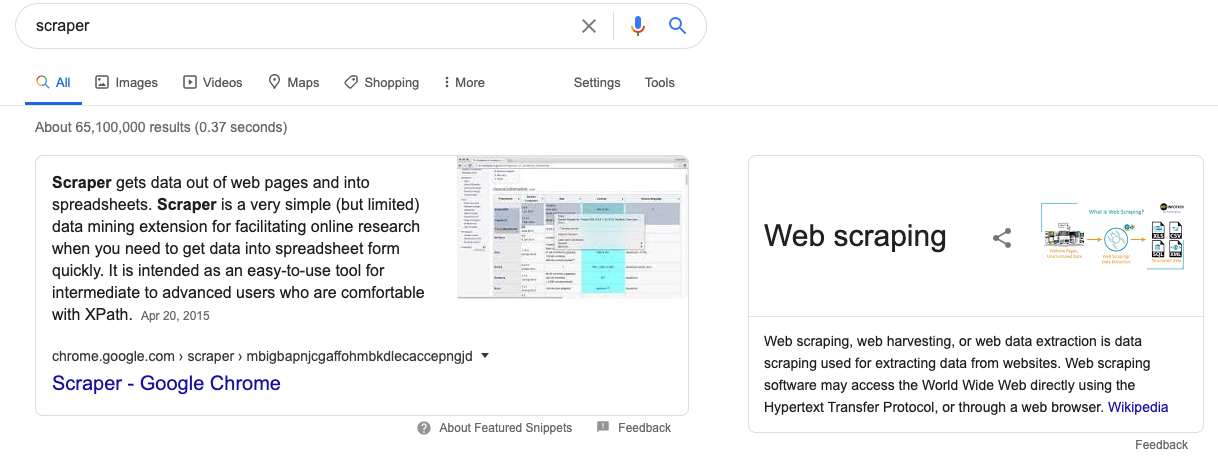

Wait, which one is correct 😂?? So it's scraper, right? Now I'm confused 😲


Yes, scraper (the one below). A scrapper is someone who scraps things (discards them, or gets into fights), which has nothing to do with web scraping.


I'll fix the name 😂 👍

2b145dc to 26dbb21

26dbb21 to 4690bfb

9119f50 to d5d2274

    @@ -1,4 +1,9 @@
    defmodule GoogleCrawler.SearchKeywordWorker do


I am really not confident about this GenServer 😂

I think it's because:

- The state is quite complex, I think 🤔 💭. It now stores a map of `%{task_ref => {%Keyword{}, retry_count}}`, so I don't know whether it is hard to read or not.
- I am not sure about the error handling part. There are 2 cases now:
  - When the task fails: this is handled by `handle_info({:DOWN, ...}, ...)`.
  - When something goes wrong while inserting the record: this also needs a retry, so I extracted the retry logic out into a new function. It looks a bit weird.

The main problem is that I'm not sure whether it should be like this or not. Is it the right way to do it? I probably need to learn more about GenServer 😵
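For reference, the state shape and the two failure paths described in the comment above could be sketched roughly like this. It is a minimal, standalone sketch and not the code in this PR: the module name `SearchWorkerSketch`, the `search` and `store` callbacks, the `pending/1` helper, and the retry limit of 3 are all assumptions made for illustration, and the state wraps the `task_ref => {keyword, retry_count}` map in an outer map so the callbacks can travel with it.

```elixir
defmodule SearchWorkerSketch do
  use GenServer

  # Assumed retry limit; the PR does not state one.
  @max_retries 3

  # `search` (arity 1) and `store` (arity 2) are hypothetical callbacks that
  # stand in for the scraper call and the database insert.
  def start_link(opts), do: GenServer.start_link(__MODULE__, opts)

  def search(server, keyword), do: GenServer.cast(server, {:search, keyword})

  # Hypothetical helper: number of tasks currently tracked.
  def pending(server), do: GenServer.call(server, :pending)

  @impl true
  def init(opts) do
    {:ok, sup} = Task.Supervisor.start_link()
    search = Keyword.fetch!(opts, :search)
    store = Keyword.fetch!(opts, :store)
    # `tasks` is the map from the comment: task_ref => {keyword, retry_count}
    {:ok, %{sup: sup, search: search, store: store, tasks: %{}}}
  end

  @impl true
  def handle_cast({:search, keyword}, state) do
    {:noreply, start_task(state, keyword, 0)}
  end

  @impl true
  def handle_call(:pending, _from, state) do
    {:reply, map_size(state.tasks), state}
  end

  # The task finished and replied with its result.
  @impl true
  def handle_info({ref, result}, state) when is_reference(ref) do
    # Drop the monitor and flush the :DOWN message that would follow.
    Process.demonitor(ref, [:flush])
    {entry, state} = pop_task(state, ref)
    {:noreply, store_or_retry(state, entry, result)}
  end

  # Case 1: the task process crashed before replying.
  def handle_info({:DOWN, ref, :process, _pid, _reason}, state) do
    {entry, state} = pop_task(state, ref)
    {:noreply, retry(state, entry)}
  end

  defp store_or_retry(state, nil, _result), do: state

  defp store_or_retry(state, {keyword, _retries} = entry, result) do
    case state.store.(keyword, result) do
      :ok -> state
      # Case 2: storing failed, so reuse the same retry path.
      {:error, _reason} -> retry(state, entry)
    end
  end

  defp retry(state, {keyword, retries}) when retries < @max_retries do
    start_task(state, keyword, retries + 1)
  end

  # Unknown ref or retries exhausted: give up on this keyword.
  defp retry(state, _entry), do: state

  defp start_task(state, keyword, retries) do
    search = state.search
    task = Task.Supervisor.async_nolink(state.sup, fn -> search.(keyword) end)
    %{state | tasks: Map.put(state.tasks, task.ref, {keyword, retries})}
  end

  defp pop_task(state, ref) do
    {entry, tasks} = Map.pop(state.tasks, ref)
    {entry, %{state | tasks: tasks}}
  end
end
```

Both failure cases end up in the single `retry/2` function, so the retry count lives in one place. Starting the worker with `SearchWorkerSketch.start_link(search: fun1, store: fun2)` and calling `SearchWorkerSketch.search(pid, "some keyword")` is enough to try it in `iex`.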

What happened

✅ Store the keyword search result

✅ Display the result on the keyword page

Insight

Add a new table `links`.

User -(has many)-> Keywords -(has many)-> Links

A Link stores the result URL, whether that URL is an AdWords ad or not, and where it appears on the page (top or bottom).

Proof Of Work