-

Notifications

You must be signed in to change notification settings - Fork 0

As a user, I can see my keyword raw result #8

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

base: feature/create-keyword

Are you sure you want to change the base?

Conversation

2218905 to

4f85743

Compare

6f8f71b to

e3ebe3c

Compare

| {:reply, :ok, new_state} | ||

| end | ||

|

|

||

| def handle_info({ref, result}, state) do |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

How/when does handle_info gets called? 🤔 start_task is started without any links so how?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

For this one,

it happens when we start the task with Task.Supervisor.async_nolink below. it will send the message with task reference and the result back here.

But if the task is failed, it will trigger the handle_info({:DOWN, ref, :process, _pid, _reason}, state) below.

More info:

https://hexdocs.pm/elixir/Task.Supervisor.html#async_nolink/3

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I understood more by seeing the actual code example.

At first, I was confused as I thought that the supervisor would be notified, not the Genserver. For the supervisor to be notified, then it requires to use start_child.

| @@ -0,0 +1,84 @@ | |||

| defmodule GoogleCrawler.SearchKeywordWorker do | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

But if this process dies then all state would be gone, right?

When restarting should it then reads from the database to pick up all keyword remaining to be scraped? 🤔

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yep true. I haven't think about this case 😂

bbcff27 to

2d1ea89

Compare

2f6e4fc to

0648d54

Compare

0648d54 to

9b3fd44

Compare

| end | ||

|

|

||

| defp google_api_client do | ||

| Application.get_env(:google_crawler, :google_api_client) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I noticed that you used the same technic to inject the right module based on the environment (as per this blog post I shared). I even feel that this is something we could bring to Ruby projects

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Another interesting ideas -> https://medium.com/flatiron-labs/rolling-your-own-mock-server-for-testing-in-elixir-2cdb5ccdd1a0 🤔 This one is to running a server in the test env.

| assert %{^task_ref => {keyword, 0}} = SearchKeywordWorker.get_state() | ||

| assert Search.get_keyword(keyword.id).status == :in_progress | ||

|

|

||

| :timer.sleep(1000) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

How about adding the sleep in the Google API Client Mock to simulate delays?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think adding the delay to the client, we will need to put here also because we need to wait for the task to complete. (api is called in the task)

I found there is another way that we can monitor if the task is actually finish -> https://stackoverflow.com/questions/28745193/testing-asynchronous-code-in-elixir/28746505#28746505

Which I can do something like

task = SearchKeywordWorker.search(keyword.id)

ref = Process.monitor(task.pid)

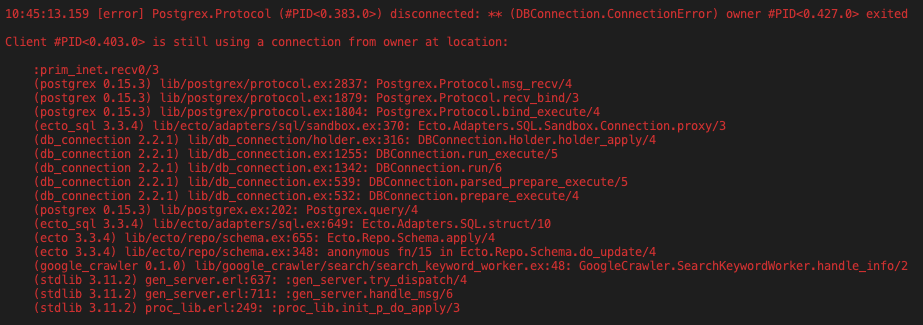

assert_receive {:DOWN, ^ref, :process, _, :normal}, 500But because even if the task is completed, I do some db update in the genserver handle_info and I encounters the error

I'm not completely sure what it is but maybe they're trying to update(in the genserver) and get the record(in the assertion). So when I use that, I added that I had to put antoher sleep before the assertion to make it work

ref = Process.monitor(task.pid)

assert_receive {:DOWN, ^ref, :process, _, :normal}, 500

:timer.sleep(100) 🤔 💭

| @@ -0,0 +1,22 @@ | |||

| defmodule GoogleCrawler.Google.ApiClientBehaviour do | |||

| @callback search(keyword :: String.t()) :: {:ok, String.t()} | {:error, String.t()} | |||

| end | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It's actually a good idea to use a behaviour so make sure that both the actual and mock modules have the same implementation.

What happened

✅ Setup sass

✅ Run the background job to get the result from google search - experiment with GenServer

✅ Update the keyword status and result

Insight

For this one, I am trying to setup a background job to search. A GenServer + Task Supervisor is setup to handle that.

There are still some known problems that I need to investigate and fix later. 😓

Proof Of Work

After upload the keyword, it shows the status on the list

The status is updated after the keyword search is done

User can click on the

view_resultto view the search result