MPI #13

base: parallel

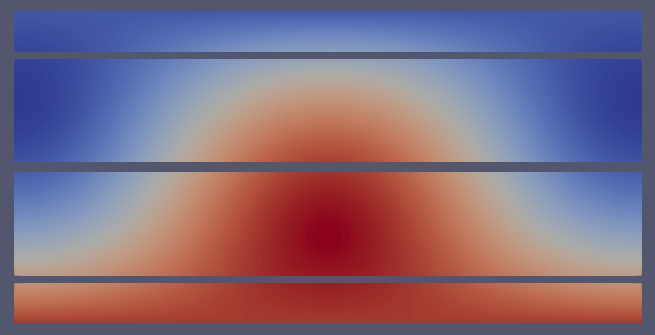

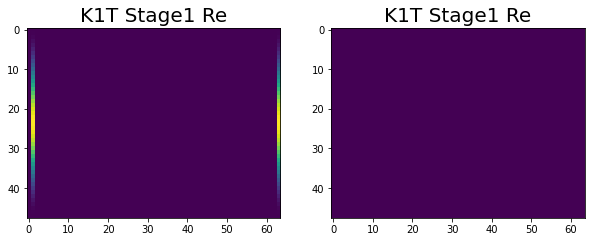

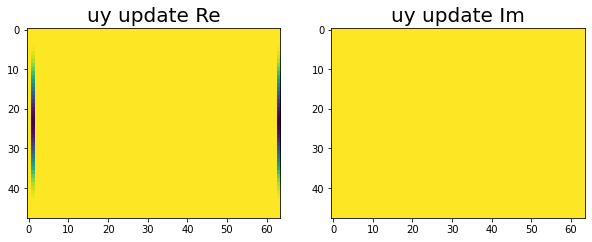

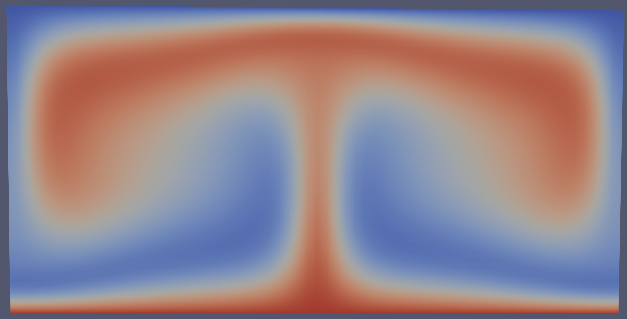

The initialization of the mesh on four processors seems close to done. I created new files for the MPI time loop and time integration, because parallelizing them is going to require a lot of restructuring. The current output covers initialization only: the VTK outputs show that the grid is split among the four processors.
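For readers following along, here is a minimal sketch of how a grid axis might be divided into contiguous blocks, one per processor. It is not taken from this PR: the helper name `block_range`, the 1D split along a single axis, and the 10-point grid are assumptions chosen only for illustration.

```python
def block_range(n_points, n_ranks, rank):
    """Return the half-open index range [start, stop) owned by `rank`.

    Hypothetical helper: points are split into contiguous blocks, and any
    remainder is handed out one point at a time to the lowest ranks.
    """
    base, extra = divmod(n_points, n_ranks)
    start = rank * base + min(rank, extra)
    stop = start + base + (1 if rank < extra else 0)
    return start, stop


# Illustrative sizes only: 10 grid points along the split axis, 4 ranks.
n_points, n_ranks = 10, 4
ranges = [block_range(n_points, n_ranks, r) for r in range(n_ranks)]
for r, (start, stop) in enumerate(ranges):
    print(f"rank {r} owns indices [{start}, {stop})")
```

Every index belongs to exactly one rank and the blocks stay contiguous, which is what makes a per-rank VTK output look like a slice of the full grid.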


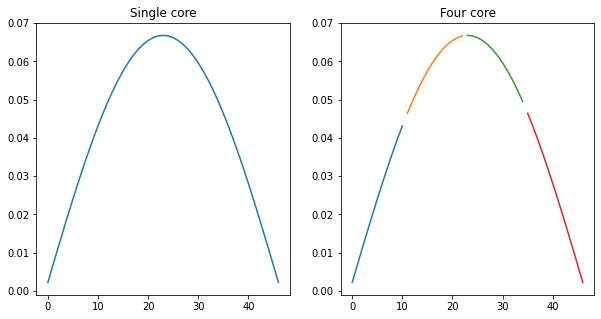

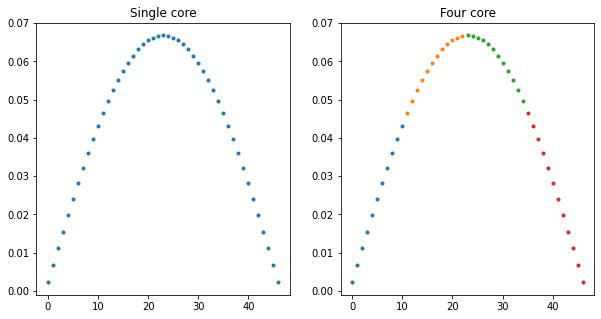

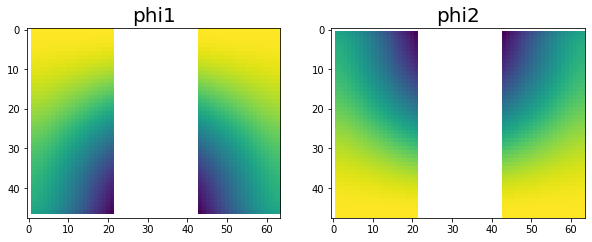

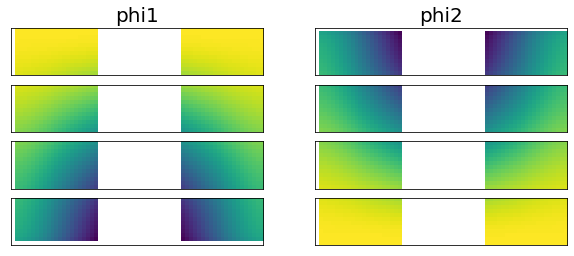

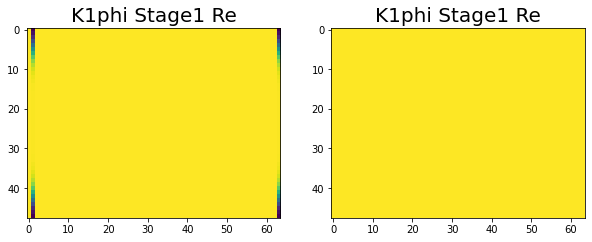

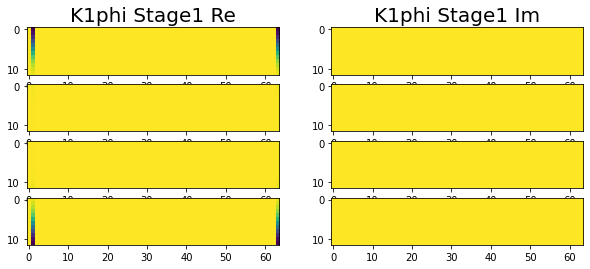

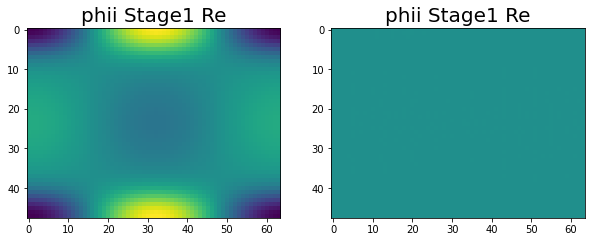

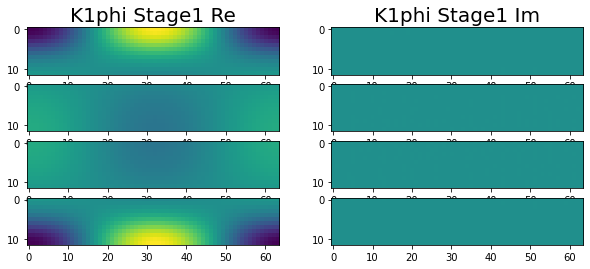

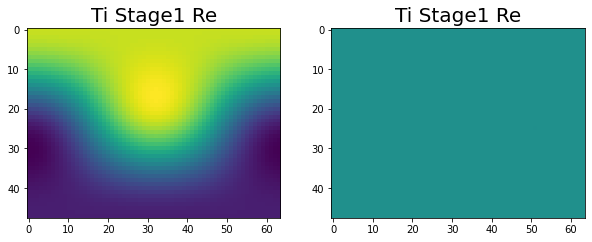

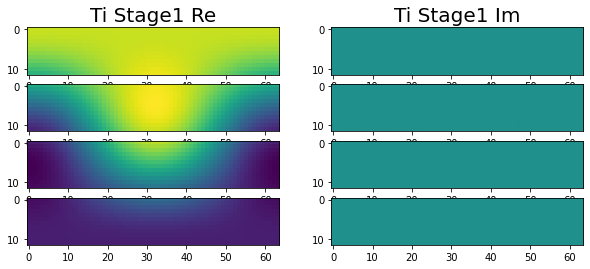

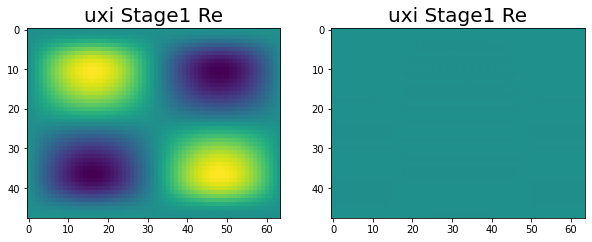

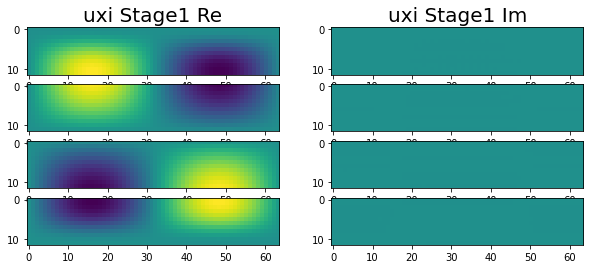

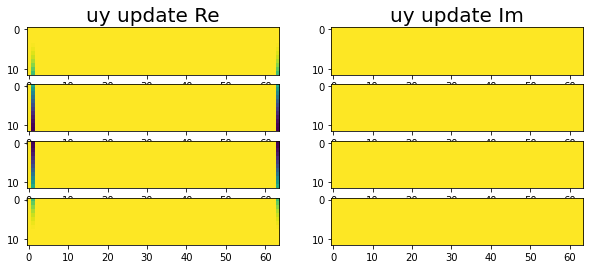

Ok, after a bit of a battle, I have the phi1 and phi2 matrices initialized on each node. This was particularly challenging because the initialization requires a linear solve of a tridiagonal system, which needs all of the g1, g2, and g3 values. To address this, each worker node sends its portion of the g vectors to the main node, where the solve is completed. Each column of the phi1 and phi2 matrices is then sent back to the worker nodes. I confirmed the correctness of the resulting matrices. The accompanying plots show the data split.
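The gather, solve, and send-back pattern described above can be sketched without MPI by treating each rank's data as a list. This is an illustration under stated assumptions, not the code in this PR: it assumes, purely for the example, that g1, g2, and g3 act as the sub-, main, and super-diagonals of the system, and the right-hand side, chunk sizes, and helper names (`thomas_solve`, `gather`, `scatter`) are all hypothetical. The solver shown is the standard Thomas algorithm, which may differ from the solver actually used.

```python
def thomas_solve(lower, diag, upper, rhs):
    """Solve a tridiagonal system with the Thomas algorithm.

    lower[i] multiplies x[i-1] in row i (lower[0] is unused);
    upper[i] multiplies x[i+1] in row i (upper[-1] is unused).
    """
    n = len(diag)
    c = [0.0] * n  # modified super-diagonal
    d = [0.0] * n  # modified right-hand side
    c[0] = upper[0] / diag[0]
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):  # forward elimination
        denom = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / denom
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):  # back substitution
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def gather(chunks):
    """Stand-in for workers sending their pieces to the main node."""
    return [value for chunk in chunks for value in chunk]


def scatter(values, sizes):
    """Stand-in for the main node sending each rank its piece back."""
    pieces, start = [], 0
    for size in sizes:
        pieces.append(values[start:start + size])
        start += size
    return pieces


# Hypothetical per-rank pieces for four ranks (made-up numbers).
g1_chunks = [[0.0, -1.0], [-1.0, -1.0], [-1.0], [-1.0]]   # assumed sub-diagonal
g2_chunks = [[4.0, 4.0], [4.0, 4.0], [4.0], [4.0]]        # assumed main diagonal
g3_chunks = [[-1.0, -1.0], [-1.0, -1.0], [-1.0], [0.0]]   # assumed super-diagonal
rhs_chunks = [[2.0, 4.0], [6.0, 8.0], [10.0], [19.0]]     # made-up right-hand side

sizes = [len(chunk) for chunk in g2_chunks]
solution = thomas_solve(gather(g1_chunks), gather(g2_chunks),
                        gather(g3_chunks), gather(rhs_chunks))
phi_chunks = scatter(solution, sizes)  # one piece per rank
```

In an MPI code, the two stand-in helpers would correspond to point-to-point sends and receives, or to collectives such as `MPI_Gatherv` and `MPI_Scatterv`. Solving on one node keeps the solver serial and simple, at the cost of a communication step before and after it.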


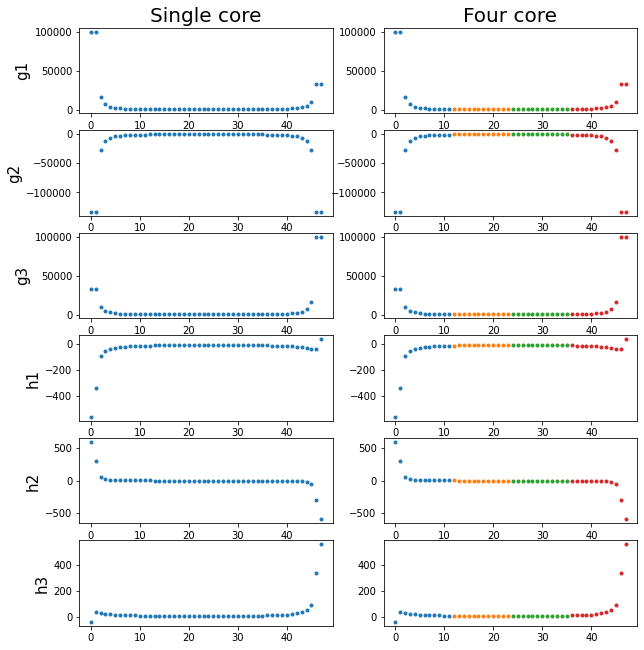

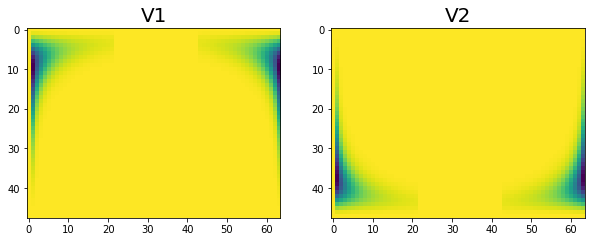

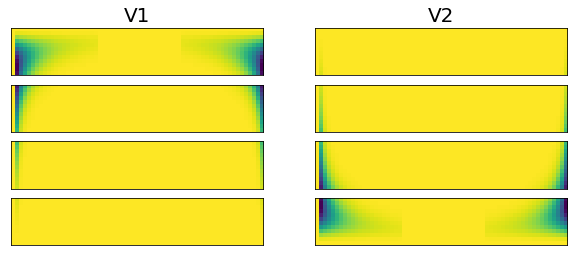

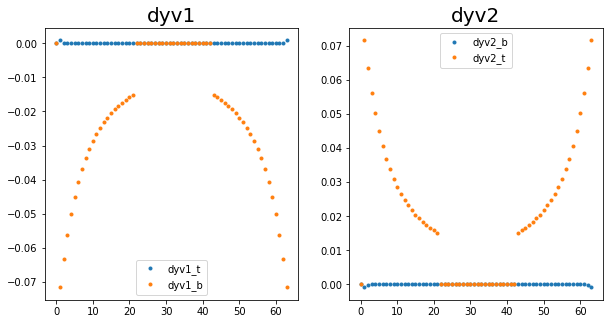

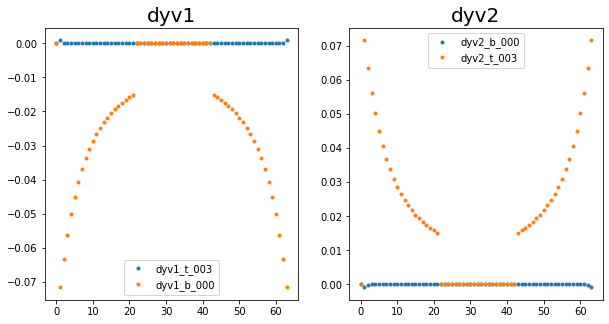

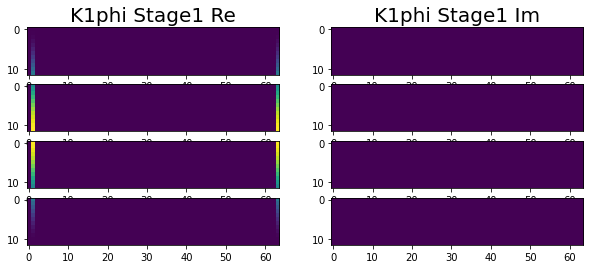

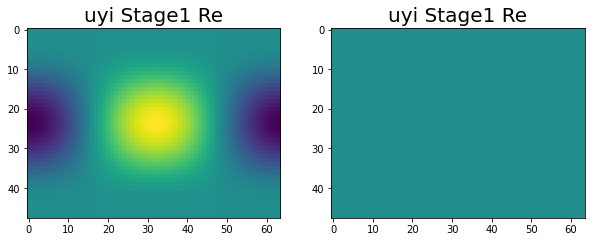

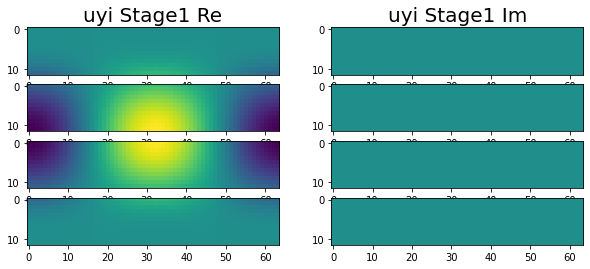

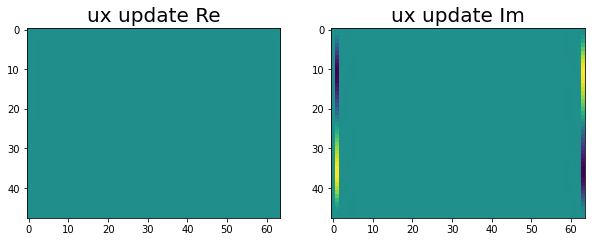

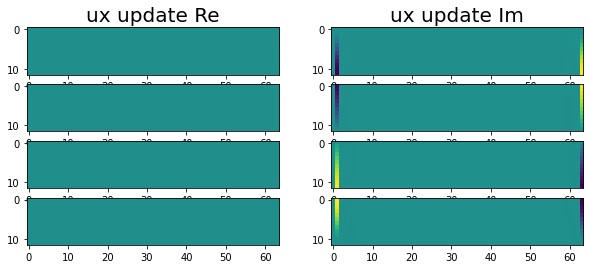

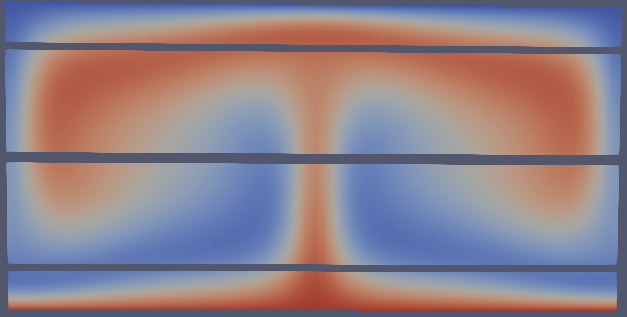

Added support for the wall derivatives. Since these are calculated at the top and bottom of the grid, the bottom derivatives are held by the first processor and the top derivatives by the last processor. This is simple enough to implement. The accompanying figures compare the four derivatives on a single node with the same derivatives split across the bottom and top nodes.
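The ownership rule for the wall derivatives can be sketched as follows. The function names are hypothetical, and the second-order one-sided stencil is only an assumed stand-in, since the PR does not say which difference formula the code uses.

```python
def owned_walls(rank, n_ranks):
    """Return which wall derivatives a rank holds (hypothetical helper).

    The first rank holds the bottom wall and the last rank holds the top
    wall; with a single rank, both walls live on that rank.
    """
    walls = []
    if rank == 0:
        walls.append("bottom")
    if rank == n_ranks - 1:
        walls.append("top")
    return walls


def one_sided_derivative(f0, f1, f2, dy):
    """Second-order one-sided first derivative at the wall value f0.

    f1 and f2 are the next two points into the domain. Pass a negative
    dy for the top wall, where the interior points lie below the wall.
    This stencil is an assumption made for illustration.
    """
    return (-3.0 * f0 + 4.0 * f1 - f2) / (2.0 * dy)


# Ownership on four ranks versus a single rank.
split = [owned_walls(r, 4) for r in range(4)]
single = owned_walls(0, 1)

# Made-up check with f(y) = 3*y + y**2, whose slope at y = 0 is 3.
dy = 0.1
f = [3.0 * y + y * y for y in (0.0, dy, 2.0 * dy)]
bottom_slope = one_sided_derivative(f[0], f[1], f[2], dy)
```

Because only the first and last ranks touch a wall, the interior ranks need no extra communication for this step.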
