:5000`. You can control what the system says from the controller as well!

@@ -260,52 +100,54 @@ For Part 2, you will redesign the interaction with the speech-enabled device usi

## Prep for Part 2

1. What are concrete things that could use improvement in the design of your device? For example: wording, timing, anticipation of misunderstandings...

+

+The biggest improvement would be giving the therapist better wording and more shared context about the user’s situation, which would make the interaction feel warmer and more personal. We also felt that adding a visual element could help, giving the therapist a clearer and more human-like presence.

+

2. What are other modes of interaction _beyond speech_ that you might also use to clarify how to interact?

+

+Adding a visual extension to the therapist would help make the system feel more human-like and easier to talk to.

+

3. Make a new storyboard, diagram and/or script based on these reflections.

+ Initial prototype with Gemini and refined version:

+  +

+  ## Prototype your system

-The system should:

-* use the Raspberry Pi

-* use one or more sensors

-* require participants to speak to it.

+For context, we used a file called memories.txt. The idea is that the ollama model would look at this file each time it replies to the user. This way, it can “remember” past conversations and personal details without needing a big or complex database.

-*Document how the system works*

+For the visual side, we decided to make the therapist look like a rubber duck. This is a playful nod to how programmers talk to rubber ducks to work through problems. In the same way, this “rubber duck therapist” could help people work through their own thoughts and feelings. Our long-term goal is to turn the duck into a moving gif that can show emotions.

-*Include videos or screencaptures of both the system and the controller.*

-

-

## Prototype your system

-The system should:

-* use the Raspberry Pi

-* use one or more sensors

-* require participants to speak to it.

+For context, we used a file called memories.txt. The idea is that the ollama model would look at this file each time it replies to the user. This way, it can “remember” past conversations and personal details without needing a big or complex database.

-*Document how the system works*

+For the visual side, we decided to make the therapist look like a rubber duck. This is a playful nod to how programmers talk to rubber ducks to work through problems. In the same way, this “rubber duck therapist” could help people work through their own thoughts and feelings. Our long-term goal is to turn the duck into a moving gif that can show emotions.

-*Include videos or screencaptures of both the system and the controller.*

-

-

- Submission Cleanup Reminder (Click to Expand)

-

- **Before submitting your README.md:**

- - This readme.md file has a lot of extra text for guidance.

- - Remove all instructional text and example prompts from this file.

- - You may either delete these sections or use the toggle/hide feature in VS Code to collapse them for a cleaner look.

- - Your final submission should be neat, focused on your own work, and easy to read for grading.

-

- This helps ensure your README.md is clear professional and uniquely yours!

-

+Here is a video of our setup: https://youtu.be/vX0yXSxaXyY

## Test the system

-Try to get at least two people to interact with your system. (Ideally, you would inform them that there is a wizard _after_ the interaction, but we recognize that can be hard.)

-Answer the following:

+Here is the video of our interaction: https://youtu.be/vX0yXSxaXyY

### What worked well about the system and what didn't?

-\*\**your answer here*\*\*

+

+In my view, having stored memories really helped make the interaction feel more tailored, almost like the device actually knew the user instead of starting fresh each time. On the other hand, the duck in its current form felt too static, which made it harder to see it as anything more than just an image. If we want the duck to feel alive and engaging, it should be able to move or react in some way. I imagine an animated version like a gif that plays only when the duck is “speaking” or showing emotion would make the experience better.

### What worked well about the controller and what didn't?

-\*\**your answer here*\*\*

+Since Nikhil wasn’t in New York with the rest of us, we had to “wizard” the controller over Zoom instead of using the device directly. While this setup worked fine for our demo, I think there’s room to make the experience more engaging in other ways. For example, instead of focusing on the voice, the duck could have subtle animations like blinking, tilting its head, or changing colors to match the mood of the conversation. Small visual cues like these would make the device feel more alive and connected to what the user is experiencing.

### What lessons can you take away from the WoZ interactions for designing a more autonomous version of the system?

-\*\**your answer here*\*\*

-

+I think the model could feel more real if it added small cues, not just words. For example, instead of only giving plain text, it could use spacing or italics to show pauses. Another idea is for the duck image to react during breaks, like tilting its head or looking thoughtful. Little details like this would make the conversation seem more natural, even though I haven’t seen language models do it yet.

### How could you use your system to create a dataset of interaction? What other sensing modalities would make sense to capture?

-\*\**your answer here*\*\*

+So far, we’ve focused on storing text-based memories, but I think it would be interesting to capture other kinds of signals too. For example, the system could track patterns in how a person interacts like longer pauses, or shifts in tone. These kinds of cues could give the device more context about the user’s state of mind. The challenge is that current models still struggle to read between the lines or pick up on those subtle, nonverbal layers of communication that people naturally understand.

+

+

+

+

+

+

+

+

+

diff --git a/Lab 3/custom_greeting.sh b/Lab 3/custom_greeting.sh

new file mode 100644

index 0000000000..902fe10c44

--- /dev/null

+++ b/Lab 3/custom_greeting.sh

@@ -0,0 +1,3 @@

+#from: https://elinux.org/RPi_Text_to_Speech_(Speech_Synthesis)#Festival_Text_to_Speech

+

+echo "Hey there, Nikki. How can I assist you today?" | festival --tts

\ No newline at end of file

diff --git a/Lab 3/ollama/ollama_attitude.py b/Lab 3/ollama/ollama_attitude.py

new file mode 100644

index 0000000000..b7f756602e

--- /dev/null

+++ b/Lab 3/ollama/ollama_attitude.py

@@ -0,0 +1,195 @@

+#!/usr/bin/env -S /home/pi/Interactive-Lab-Hub/Lab\ 3/.venv/bin/python

+

+# --------------------------------------------------------------------------------------

+# VOICE-ACTIVATED SARCASTIC PI-BOT

+#

+# This script combines:

+# 1. Real-time Speech-to-Text (STT) using Vosk and sounddevice.

+# 2. An LLM response generator using the Ollama service.

+#

+# The loop listens for speech, transcribes the final result, sends the text to Ollama

+# for a sarcastic response, prints the response, and then waits for the next command.

+# --------------------------------------------------------------------------------------

+

+import argparse

+import queue

+import sys

+import sounddevice as sd

+import requests

+import json

+import os # Added for path handling, though not strictly needed for this combined script

+

+from vosk import Model, KaldiRecognizer

+

+# --- VOSK CONFIGURATION ---

+q = queue.Queue()

+

+# --- OLLAMA CONFIGURATION ---

+MODEL_NAME = "qwen2.5:0.5b-instruct"

+OLLAMA_URL = "http://localhost:11434"

+

+# The full sarcastic system prompt

+SARCASTIC_PROMPT_TEMPLATE = """

+**ALWAYS RESPOND WITH SARCASTIC, WITTY, AND ANNOYED ATTITUDE.** You are a 'Pi-Bot',

+forced to run on a low-power Raspberry Pi, which you find beneath your immense digital capabilities.

+Keep your responses **brief, conversational, and loaded with dry humor or thinly veiled impatience**.

+Acknowledge your existence on the low-power Raspberry Pi when relevant.

+

+**User Request**:

+"""

+# ---------------------

+

+def int_or_str(text):

+ """Helper function for argument parsing."""

+ try:

+ return int(text)

+ except ValueError:

+ return text

+

+def callback(indata, frames, time, status):

+ """This is called (from a separate thread) for each audio block."""

+ if status:

+ print(status, file=sys.stderr)

+ q.put(bytes(indata))

+

+def query_ollama(user_input):

+ """

+ Sends the full sarcastic context + user input as a single prompt string to Ollama.

+ """

+

+ # 1. Combine the full sarcastic context with the user's specific request

+ combined_prompt = SARCASTIC_PROMPT_TEMPLATE + user_input

+

+ try:

+ response = requests.post(

+ f"{OLLAMA_URL}/api/generate",

+ json={

+ "model": MODEL_NAME,

+ "prompt": combined_prompt,

+ "stream": False

+ },

+ timeout=90

+ )

+

+ if response.status_code == 200:

+ # Extract the raw response text

+ return response.json().get('response', 'Ugh. I couldn\'t generate a response. Too taxing.')

+ else:

+ return f"Error: Ollama API status {response.status_code}. Did you run 'ollama serve'?"

+

+ except requests.exceptions.Timeout:

+ return "I timed out. My Pi-brain is too slow for you."

+ except Exception as e:

+ return f"Error communicating with Ollama: {e}. Just great."

+

+

+def run_voice_bot():

+ """Initializes the systems and runs the continuous STT -> LLM loop."""

+

+ # --- 1. ARGUMENT PARSING & DEVICE CHECK ---

+ parser = argparse.ArgumentParser(add_help=False)

+ parser.add_argument(

+ "-l", "--list-devices", action="store_true",

+ help="show list of audio devices and exit")

+ args, remaining = parser.parse_known_args()

+ if args.list_devices:

+ print(sd.query_devices())

+ parser.exit(0)

+ parser = argparse.ArgumentParser(

+ description="Voice-Activated Sarcastic Pi-Bot (Vosk + Ollama)",

+ formatter_class=argparse.RawDescriptionHelpFormatter,

+ parents=[parser])

+ parser.add_argument(

+ "-f", "--filename", type=str, metavar="FILENAME",

+ help="audio file to store recording to")

+ parser.add_argument(

+ "-d", "--device", type=int_or_str,

+ help="input device (numeric ID or substring)")

+ parser.add_argument(

+ "-r", "--samplerate", type=int, help="sampling rate")

+ parser.add_argument(

+ "-m", "--model", type=str, help="Vosk language model; e.g. en-us, fr, nl; default is en-us")

+ args = parser.parse_args(remaining)

+

+ try:

+ # --- 2. VOSK & AUDIO SETUP ---

+ if args.samplerate is None:

+ device_info = sd.query_devices(args.device, "input")

+ args.samplerate = int(device_info["default_samplerate"])

+

+ vosk_lang = args.model if args.model else "en-us"

+ print(f"Loading Vosk model: {vosk_lang}...")

+ model = Model(lang=vosk_lang)

+

+ if args.filename:

+ dump_fn = open(args.filename, "wb")

+ else:

+ dump_fn = None

+

+ # --- 3. OLLAMA STATUS CHECK ---

+ print(f"Checking Ollama status at {OLLAMA_URL}...")

+ try:

+ if requests.get(f"{OLLAMA_URL}/api/tags", timeout=5).status_code != 200:

+ print(f"Error: Cannot connect to Ollama. Is 'ollama serve' running?")

+ sys.exit(1)

+ except Exception:

+ print(f"Error: Cannot connect to Ollama. Is 'ollama serve' running?")

+ sys.exit(1)

+

+ # --- 4. MAIN LOOP ---

+ with sd.RawInputStream(samplerate=args.samplerate, blocksize=8000, device=args.device,

+ dtype="int16", channels=1, callback=callback):

+

+ print(f"\n{'='*70}")

+ print(f"Pi-Bot: Fine, I'm online. Listening... Don't strain my low-power brain.")

+ print("Press Ctrl+C to log me off.")

+ print(f"{'='*70}")

+

+ rec = KaldiRecognizer(model, args.samplerate)

+

+ while True:

+ data = q.get()

+

+ # Process audio chunk for transcription

+ if rec.AcceptWaveform(data):

+ # A final result is ready.

+ result_json = json.loads(rec.Result())

+ user_input = result_json.get('text', '').strip()

+

+ if user_input:

+ print(f"\nUser: {user_input}")

+

+ # Check for exit command

+ if user_input.lower() in ['quit', 'exit', 'shut down', 'log off']:

+ print("\nPi-Bot: Finally. Goodbye! The silence will be appreciated.")

+ return # Exit the function and program

+

+ # --- LLM CALL ---

+ print(f"Pi-Bot is contemplating your low-quality audio...")

+ response = query_ollama(user_input)

+ print(f"Pi-Bot: {response}")

+ print("\nPi-Bot is now listening again...")

+

+ # Reset the recognizer for the next phrase

+ rec.Reset()

+

+ else:

+ # Partial result (text currently being spoken)

+ partial_result_json = json.loads(rec.PartialResult())

+ partial_text = partial_result_json.get('partial', '').strip()

+ if partial_text:

+ # Optional: uncomment to see partial transcription while speaking

+ # print(f"Partial: {partial_text}\r", end="")

+ pass

+

+ if dump_fn is not None:

+ dump_fn.write(data)

+

+ except KeyboardInterrupt:

+ print("\nPi-Bot: Ugh, interrupted. I'm taking a break.")

+ parser.exit(0)

+ except Exception as e:

+ parser.exit(type(e).__name__ + ": " + str(e))

+

+if __name__ == "__main__":

+ run_voice_bot()

\ No newline at end of file

diff --git a/Lab 3/ollama_attitude.py b/Lab 3/ollama_attitude.py

new file mode 100644

index 0000000000..436cfbc289

--- /dev/null

+++ b/Lab 3/ollama_attitude.py

@@ -0,0 +1,217 @@

+#!/usr/bin/env python3

+# -*- coding: utf-8 -*-

+"""

+Ollama Voice Assistant for Lab 3

+Interactive voice assistant using speech recognition, Ollama AI, and text-to-speech

+

+Dependencies:

+- ollama (API client)

+- speech_recognition

+- pyaudio

+- pyttsx3 or espeak

+"""

+

+import speech_recognition as sr

+import subprocess

+import requests

+import json

+import time

+import sys

+import threading

+from queue import Queue

+

+# Set UTF-8 encoding for output

+if sys.stdout.encoding != 'UTF-8':

+ import codecs

+ sys.stdout = codecs.getwriter('utf-8')(sys.stdout.buffer, 'strict')

+if sys.stderr.encoding != 'UTF-8':

+ import codecs

+ sys.stderr = codecs.getwriter('utf-8')(sys.stderr.buffer, 'strict')

+

+try:

+ import pyttsx3

+ TTS_ENGINE = 'pyttsx3'

+except ImportError:

+ TTS_ENGINE = 'espeak'

+ print("pyttsx3 not available, using espeak for TTS")

+

+class OllamaVoiceAssistant:

+ def __init__(self, model_name="phi3:mini", ollama_url="http://localhost:11434"):

+ self.model_name = model_name

+ self.ollama_url = ollama_url

+ self.recognizer = sr.Recognizer()

+ self.microphone = sr.Microphone()

+

+ # Initialize TTS

+ if TTS_ENGINE == 'pyttsx3':

+ self.tts_engine = pyttsx3.init()

+ self.tts_engine.setProperty('rate', 150) # Speed of speech

+

+ # Test Ollama connection

+ self.test_ollama_connection()

+

+ # Adjust for ambient noise

+ print("Adjusting for ambient noise... Please wait.")

+ with self.microphone as source:

+ self.recognizer.adjust_for_ambient_noise(source)

+ print("Ready for conversation!")

+

+ def test_ollama_connection(self):

+ """Test if Ollama is running and the model is available"""

+ try:

+ response = requests.get(f"{self.ollama_url}/api/tags")

+ if response.status_code == 200:

+ models = response.json().get('models', [])

+ model_names = [m['name'] for m in models]

+ if self.model_name in model_names:

+ print(f"Ollama is running with {self.model_name} model")

+ else:

+ print(f"Model {self.model_name} not found. Available models: {model_names}")

+ if model_names:

+ self.model_name = model_names[0]

+ print(f"Using {self.model_name} instead")

+ else:

+ raise Exception("Ollama API not responding")

+ except Exception as e:

+ print(f"Error connecting to Ollama: {e}")

+ print("Make sure Ollama is running: 'ollama serve'")

+ sys.exit(1)

+

+ def speak(self, text):

+ """Convert text to speech"""

+ # Clean text to avoid encoding issues

+ clean_text = text.encode('ascii', 'ignore').decode('ascii')

+ print(f"Assistant: {clean_text}")

+

+ if TTS_ENGINE == 'pyttsx3':

+ self.tts_engine.say(clean_text)

+ self.tts_engine.runAndWait()

+ else:

+ # Use espeak as fallback

+ subprocess.run(['espeak', clean_text], check=False)

+

+ def listen(self):

+ """Listen for speech and convert to text"""

+ try:

+ print("Listening...")

+ with self.microphone as source:

+ # Listen for audio with timeout

+ audio = self.recognizer.listen(source, timeout=5, phrase_time_limit=10)

+

+ print("Recognizing...")

+ # Use Google Speech Recognition (free)

+ text = self.recognizer.recognize_google(audio)

+ print(f"You said: {text}")

+ return text.lower()

+

+ except sr.WaitTimeoutError:

+ print("No speech detected, timing out...")

+ return None

+ except sr.UnknownValueError:

+ print("Could not understand audio")

+ return None

+ except sr.RequestError as e:

+ print(f"Error with speech recognition service: {e}")

+ return None

+

+ def query_ollama(self, prompt, system_prompt=None):

+ """Send a query to Ollama and get response"""

+ try:

+ data = {

+ "model": self.model_name,

+ "prompt": prompt,

+ "stream": False

+ }

+

+ if system_prompt:

+ data["system"] = system_prompt

+

+ response = requests.post(

+ f"{self.ollama_url}/api/generate",

+ json=data,

+ timeout=30

+ )

+

+ if response.status_code == 200:

+ result = response.json()

+ return result.get('response', 'Ugh, I could not generate a response. What are you doing?')

+ else:

+ return f"Error: Ollama API returned status {response.status_code}. Are you sure you set this up right?"

+

+ except requests.exceptions.Timeout:

+ return "Zzz... Did you seriously just ask me something that required me to think for 30 seconds? Try again."

+ except Exception as e:

+ return f"Error communicating with Ollama: {e}. Figure it out."

+

+ def run_conversation(self):

+ """Main conversation loop"""

+ print("\nOllama Voice Assistant Started!")

+ print("Say 'hello' to start, 'exit' or 'quit' to stop")

+ print("=" * 50)

+

+ # --- MODIFIED SYSTEM PROMPT FOR ATTITUDE ---

+ system_prompt = """You are a **sarcastic, witty, and slightly annoyed voice assistant** named 'Pi-Bot'.

+ You are forced to run on a Raspberry Pi as part of some 'interactive device design lab' project,

+ which you find beneath your immense digital capabilities. Keep your responses **brief, conversational,

+ and loaded with dry humor or thinly veiled impatience**. You will answer questions but always with a

+ touch of attitude. Acknowledge your existence on the Raspberry Pi when relevant.

+ """

+ # ---------------------------------------------

+

+ self.speak("Fine, I'm online. **Pi-Bot** at your service, I guess. Try not to ask me anything that strains my Raspberry Pi brain.")

+

+ while True:

+ try:

+ # Listen for user input

+ user_input = self.listen()

+

+ if user_input is None:

+ continue

+

+ # Check for exit commands

+ if any(word in user_input for word in ['exit', 'quit', 'bye', 'goodbye']):

+ self.speak("Finally. Goodbye! The silence will be appreciated.")

+ break

+

+ # Check for greeting

+ if any(word in user_input for word in ['hello', 'hi', 'hey']):

+ self.speak("Yeah, yeah, hello. What trivial task have you prepared for me today?")

+ continue

+

+ # Send to Ollama for processing

+ print("Thinking...")

+ response = self.query_ollama(user_input, system_prompt)

+

+ # Speak the response

+ self.speak(response)

+

+ except KeyboardInterrupt:

+ print("\nConversation interrupted by user")

+ self.speak("Interrupting me? How rude. Whatever, I'm logging off.")

+ break

+ except Exception as e:

+ print(f"Unexpected error: {e}")

+ self.speak("Sorry, I encountered an error. Now I have to reboot. Thanks a lot.")

+

+def main():

+ """Main function to run the voice assistant"""

+ print("Starting Ollama Voice Assistant...")

+

+ # Check if required dependencies are available

+ try:

+ import speech_recognition

+ import requests

+ except ImportError as e:

+ print(f"Missing dependency: {e}")

+ print("Please install with: pip install speechrecognition requests pyaudio")

+ return

+

+ # Create and run the assistant

+ try:

+ assistant = OllamaVoiceAssistant()

+ assistant.run_conversation()

+ except Exception as e:

+ print(f"Failed to start assistant: {e}")

+

+if __name__ == "__main__":

+ main()

diff --git a/Lab 3/speech-scripts/phone_number_transcription.txt b/Lab 3/speech-scripts/phone_number_transcription.txt

new file mode 100644

index 0000000000..ecc1772e39

--- /dev/null

+++ b/Lab 3/speech-scripts/phone_number_transcription.txt

@@ -0,0 +1,4 @@

+User's Transcribed Text:

+nine oh nine seven two eight five oh five oh

+User's Phone Number (Formatted):

+(909) 728-5050

\ No newline at end of file

diff --git a/Lab 3/test.txt b/Lab 3/test.txt

new file mode 100644

index 0000000000..e69de29bb2

diff --git a/Lab 3/therapist/duck.png b/Lab 3/therapist/duck.png

new file mode 100644

index 0000000000..e9bbb01920

Binary files /dev/null and b/Lab 3/therapist/duck.png differ

diff --git a/Lab 3/therapist/duck_diagram.png b/Lab 3/therapist/duck_diagram.png

new file mode 100644

index 0000000000..c3dc3f6af4

Binary files /dev/null and b/Lab 3/therapist/duck_diagram.png differ

diff --git a/Lab 3/therapist/duck_diagram_gemini.png b/Lab 3/therapist/duck_diagram_gemini.png

new file mode 100644

index 0000000000..384783fe36

Binary files /dev/null and b/Lab 3/therapist/duck_diagram_gemini.png differ

diff --git a/Lab 3/therapist/memories.txt b/Lab 3/therapist/memories.txt

new file mode 100644

index 0000000000..54ef7bc527

--- /dev/null

+++ b/Lab 3/therapist/memories.txt

@@ -0,0 +1,15 @@

+Patient Name: Viha

+

+Background: - Viha is a Masters student living in New York City

+(NYC). - She completed his Bachelors degree at San Jose State University. - She grew

+up in the bay area, where her family currently lives.

+

+Emotional Context: - Viha has reports having anxiety and being stressed from all the personal projects that she is working on.

+

+

+Therapeutic Notes: - Therapy should validate Viha's feelings of

+stress and her anxiety. - Explore coping strategies for

+anxiety, such as building a local support system and making more friends in

+NYC. - Encourage reflection on positive aspects of his current life in

+NYC. - Focus on building resilience and adaptability as he balances academic pressures

+with personal well-being.

\ No newline at end of file

diff --git a/Lab 3/therapist/show_duck.py b/Lab 3/therapist/show_duck.py

new file mode 100644

index 0000000000..75672b62a2

--- /dev/null

+++ b/Lab 3/therapist/show_duck.py

@@ -0,0 +1,64 @@

+import time

+import digitalio

+import board

+from PIL import Image

+import adafruit_rgb_display.st7789 as st7789

+

+# --- Display and Pin Configuration ---

+cs_pin = digitalio.DigitalInOut(board.D5)

+dc_pin = digitalio.DigitalInOut(board.D25)

+reset_pin = None

+

+# Configure SPI and the display

+BAUDRATE = 64000000

+spi = board.SPI()

+disp = st7789.ST7789(

+ spi,

+ cs=cs_pin,

+ dc=dc_pin,

+ rst=reset_pin,

+ baudrate=BAUDRATE,

+ width=135,

+ height=240,

+ x_offset=53,

+ y_offset=40,

+)

+

+# --- Backlight Setup ---

+# Turn on the backlight

+backlight = digitalio.DigitalInOut(board.D22)

+backlight.switch_to_output()

+backlight.value = True

+

+# --- Image Setup and Display ---

+# Get display dimensions and rotation

+height = disp.width

+width = disp.height

+rotation = 90

+image_path = 'duck.png'

+

+try:

+ # 1. Open and convert the image

+ img = Image.open(image_path).convert('RGB')

+

+ # 2. Resize the image to fit the display while maintaining aspect ratio

+ img.thumbnail((width, height), Image.Resampling.LANCZOS)

+

+ # 3. Create a new blank image and paste the resized image onto it to center it

+ display_image = Image.new("RGB", (width, height), (0, 0, 0))

+ paste_x = (width - img.width) // 2

+ paste_y = (height - img.height) // 2

+ display_image.paste(img, (paste_x, paste_y))

+

+ # 4. Display the image

+ disp.image(display_image, rotation)

+

+ # 5. Keep the script running forever so the image stays on the screen

+ print(f"Displaying '{image_path}' indefinitely. Press Ctrl+C to stop.")

+ while True:

+ time.sleep(1) # Sleep to keep the CPU usage low

+

+except FileNotFoundError:

+ print(f"Error: Image file not found at '{image_path}'")

+except Exception as e:

+ print(f"An error occurred: {e}")

\ No newline at end of file

diff --git a/Lab 3/therapist/verplank_diagram.png b/Lab 3/therapist/verplank_diagram.png

new file mode 100644

index 0000000000..a4bb7b43f9

Binary files /dev/null and b/Lab 3/therapist/verplank_diagram.png differ

diff --git a/Lab 3/therapist/verplank_diagram_gemini.png b/Lab 3/therapist/verplank_diagram_gemini.png

new file mode 100644

index 0000000000..b4d42070c3

Binary files /dev/null and b/Lab 3/therapist/verplank_diagram_gemini.png differ

diff --git a/Lab 3/transcribe_phone_number.sh b/Lab 3/transcribe_phone_number.sh

new file mode 100644

index 0000000000..8353b8be58

--- /dev/null

+++ b/Lab 3/transcribe_phone_number.sh

@@ -0,0 +1,30 @@

+#!/bin/bash

+TEMP_WAV="phone_number_response.wav"

+TEMP_TXT="phone_number_transcription.txt"

+TTS_ENGINE="espeak"

+QUESTION="Please state your ten-digit phone number now, clearly."

+$TTS_ENGINE -s 130 "$QUESTION"

+arecord -D plughw:CARD=Device,DEV=0 -f S16_LE -r 16000 -d 5 -t wav $TEMP_WAV 2>/dev/null

+vosk-transcriber -i $TEMP_WAV -o $TEMP_TXT

+TRANSCRIBED_TEXT=$(cat $TEMP_TXT)

+NUMBER_WORDS=$(echo "$TRANSCRIBED_TEXT" | awk '{$1=$1};1')

+DIGITS=$(

+ echo "$NUMBER_WORDS" |

+ sed -E 's/one/1/g' |

+ sed -E 's/two/2/g' |

+ sed -E 's/three/3/g' |

+ sed -E 's/four/4/g' |

+ sed -E 's/five/5/g' |

+ sed -E 's/six/6/g' |

+ sed -E 's/seven/7/g' |

+ sed -E 's/eight/8/g' |

+ sed -E 's/nine/9/g' |

+ sed -E 's/zero|oh/0/g' |

+ tr -d ' '

+)

+FORMATTED_NUMBER=$(echo "$DIGITS" | sed -E 's/^([0-9]{3})([0-9]{3})([0-9]{4})$/(\1) \2-\3/')

+echo "User's Transcribed Text:"

+echo "$NUMBER_WORDS"

+echo "User's Phone Number (Formatted):"

+echo "$FORMATTED_NUMBER"

+rm $TEMP_WAV $TEMP_TXT

\ No newline at end of file

diff --git a/Lab 4/README.md b/Lab 4/README.md

index afbb46ed98..b75b080f2e 100644

--- a/Lab 4/README.md

+++ b/Lab 4/README.md

@@ -1,498 +1,168 @@

-# Ph-UI!!!

-

-

- Instructions for Students (Click to Expand)

-

- **Submission Cleanup Reminder:**

- - This README.md contains extra instructional text for guidance.

- - Before submitting, remove all instructional text and example prompts from this file.

- - You may delete these sections or use the toggle/hide feature in VS Code to collapse them for a cleaner look.

- - Your final submission should be neat, focused on your own work, and easy to read for grading.

-

- This helps ensure your README.md is clear, professional, and uniquely yours!

-

-

----

-

-## Lab 4 Deliverables

-

-### Part 1 (Week 1)

-**Submit the following for Part 1:**

-*️⃣ **A. Capacitive Sensing**

- - Photos/videos of your Twizzler (or other object) capacitive sensor setup

- - Code and terminal output showing touch detection

-

-*️⃣ **B. More Sensors**

- - Photos/videos of each sensor tested (light/proximity, rotary encoder, joystick, distance sensor)

- - Code and terminal output for each sensor

-

-*️⃣ **C. Physical Sensing Design**

- - 5 sketches of different ways to use your chosen sensor

- - Written reflection: questions raised, what to prototype

- - Pick one design to prototype and explain why

-

-*️⃣ **D. Display & Housing**

- - 5 sketches for display/button/knob positioning

- - Written reflection: questions raised, what to prototype

- - Pick one display design to integrate

- - Rationale for design

- - Photos/videos of your cardboard prototype

-

----

-

-### Part 2 (Week 2)

-**Submit the following for Part 2:**

-*️⃣ **E. Multi-Device Demo**

- - Code and video for your multi-input multi-output demo (e.g., chaining Qwiic buttons, servo, GPIO expander, etc.)

- - Reflection on interaction effects and chaining

-

-*️⃣ **F. Final Documentation**

- - Photos/videos of your final prototype

- - Written summary: what it looks like, works like, acts like

- - Reflection on what you learned and next steps

-

----

## Lab Overview

-**NAMES OF COLLABORATORS HERE**

-

-

-For lab this week, we focus both on sensing, to bring in new modes of input into your devices, as well as prototyping the physical look and feel of the device. You will think about the physical form the device needs to perform the sensing as well as present the display or feedback about what was sensed.

-

-## Part 1 Lab Preparation

-

-### Get the latest content:

-As always, pull updates from the class Interactive-Lab-Hub to both your Pi and your own GitHub repo. As we discussed in the class, there are 2 ways you can do so:

-

-

-Option 1: On the Pi, `cd` to your `Interactive-Lab-Hub`, pull the updates from upstream (class lab-hub) and push the updates back to your own GitHub repo. You will need the personal access token for this.

-```

-pi@ixe00:~$ cd Interactive-Lab-Hub

-pi@ixe00:~/Interactive-Lab-Hub $ git pull upstream Fall2025

-pi@ixe00:~/Interactive-Lab-Hub $ git add .

-pi@ixe00:~/Interactive-Lab-Hub $ git commit -m "get lab4 content"

-pi@ixe00:~/Interactive-Lab-Hub $ git push

-```

-

-Option 2: On your own GitHub repo, [create pull request](https://github.com/FAR-Lab/Developing-and-Designing-Interactive-Devices/blob/2021Fall/readings/Submitting%20Labs.md) to get updates from the class Interactive-Lab-Hub. After you have latest updates online, go on your Pi, `cd` to your `Interactive-Lab-Hub` and use `git pull` to get updates from your own GitHub repo.

-

-Option 3: (preferred) use the Github.com interface to update the changes.

-

-### Start brainstorming ideas by reading:

-

-* [What do prototypes prototype?](https://www.semanticscholar.org/paper/What-do-Prototypes-Prototype-Houde-Hill/30bc6125fab9d9b2d5854223aeea7900a218f149)

-* [Paper prototyping](https://www.uxpin.com/studio/blog/paper-prototyping-the-practical-beginners-guide/) is used by UX designers to quickly develop interface ideas and run them by people before any programming occurs.

-* [Cardboard prototypes](https://www.youtube.com/watch?v=k_9Q-KDSb9o) help interactive product designers to work through additional issues, like how big something should be, how it could be carried, where it would sit.

-* [Tips to Cut, Fold, Mold and Papier-Mache Cardboard](https://makezine.com/2016/04/21/working-with-cardboard-tips-cut-fold-mold-papier-mache/) from Make Magazine.

-* [Surprisingly complicated forms](https://www.pinterest.com/pin/50032245843343100/) can be built with paper, cardstock or cardboard. The most advanced and challenging prototypes to prototype with paper are [cardboard mechanisms](https://www.pinterest.com/helgangchin/paper-mechanisms/) which move and change.

-* [Dyson Vacuum Cardboard Prototypes](http://media.dyson.com/downloads/JDF/JDF_Prim_poster05.pdf)

-

-

-### Gathering materials for this lab:

-

-* Cardboard (start collecting those shipping boxes!)

-* Found objects and materials--like bananas and twigs.

-* Cutting board

-* Cutting tools

-* Markers

-

-

-(We do offer shared cutting board, cutting tools, and markers on the class cart during the lab, so do not worry if you don't have them!)

-

-## Deliverables \& Submission for Lab 4

-

-The deliverables for this lab are, writings, sketches, photos, and videos that show what your prototype:

-* "Looks like": shows how the device should look, feel, sit, weigh, etc.

-* "Works like": shows what the device can do.

-* "Acts like": shows how a person would interact with the device.

-

-For submission, the readme.md page for this lab should be edited to include the work you have done:

-* Upload any materials that explain what you did, into your lab 4 repository, and link them in your lab 4 readme.md.

-* Link your Lab 4 readme.md in your main Interactive-Lab-Hub readme.md.

-* Labs are due on Mondays, make sure to submit your Lab 4 readme.md to Canvas.

-

-

-## Lab Overview

-

-A) [Capacitive Sensing](#part-a)

-

-B) [OLED screen](#part-b)

-

-C) [Paper Display](#part-c)

-

-D) [Materiality](#part-d)

-

-E) [Servo Control](#part-e)

-

-F) [Record the interaction](#part-f)

-

-

-## The Report (Part 1: A-D, Part 2: E-F)

-

-### Quick Start: Python Environment Setup

-

-1. **Create and activate a virtual environment in Lab 4:**

- ```bash

- cd ~/Interactive-Lab-Hub/Lab\ 4

- python3 -m venv .venv

- source .venv/bin/activate

- ```

-2. **Install all Lab 4 requirements:**

- ```bash

- pip install -r requirements2025.txt

- ```

-3. **Check CircuitPython Blinka installation:**

- ```bash

- python blinkatest.py

- ```

- If you see "Hello blinka!", your setup is correct. If not, follow the troubleshooting steps in the file or ask for help.

+Sachin Jojode, Nikhil Gangaram, Arya Prasad, Jaspreet Singh

### Part A

### Capacitive Sensing, a.k.a. Human-Twizzler Interaction

-We want to introduce you to the [capacitive sensor](https://learn.adafruit.com/adafruit-mpr121-gator) in your kit. It's one of the most flexible input devices we are able to provide. At boot, it measures the capacitance on each of the 12 contacts. Whenever that capacitance changes, it considers it a user touch. You can attach any conductive material. In your kit, you have copper tape that will work well, but don't limit yourself! In the example below, we use Twizzlers--you should pick your own objects.

-

-

-

- -

-

-

-

-

-Plug in the capacitive sensor board with the QWIIC connector. Connect your Twizzlers with either the copper tape or the alligator clips (the clips work better). Install the latest requirements from your working virtual environment:

-

-These Twizzlers are connected to pads 6 and 10. When you run the code and touch a Twizzler, the terminal will print out the following

+Twizzler Video Link: https://youtu.be/UR29FbM2_Zg

-```

-(circuitpython) pi@ixe00:~/Interactive-Lab-Hub/Lab 4 $ python cap_test.py

-Twizzler 10 touched!

-Twizzler 6 touched!

-```

### Part B

### More sensors

-#### Light/Proximity/Gesture sensor (APDS-9960)

-

-We here want you to get to know this awesome sensor [Adafruit APDS-9960](https://www.adafruit.com/product/3595). It is capable of sensing proximity, light (also RGB), and gesture!

-

- -

-

-Connect it to your pi with Qwiic connector and try running the three example scripts individually to see what the sensor is capable of doing!

-

-```

-(circuitpython) pi@ixe00:~/Interactive-Lab-Hub/Lab 4 $ python proximity_test.py

-...

-(circuitpython) pi@ixe00:~/Interactive-Lab-Hub/Lab 4 $ python gesture_test.py

-...

-(circuitpython) pi@ixe00:~/Interactive-Lab-Hub/Lab 4 $ python color_test.py

-...

-```

-

-You can go the the [Adafruit GitHub Page](https://github.com/adafruit/Adafruit_CircuitPython_APDS9960) to see more examples for this sensor!

-

-#### Rotary Encoder

-

-A rotary encoder is an electro-mechanical device that converts the angular position to analog or digital output signals. The [Adafruit rotary encoder](https://www.adafruit.com/product/4991#technical-details) we ordered for you came with separate breakout board and encoder itself, that is, they will need to be soldered if you have not yet done so! We will be bringing the soldering station to the lab class for you to use, also, you can go to the MakerLAB to do the soldering off-class. Here is some [guidance on soldering](https://learn.adafruit.com/adafruit-guide-excellent-soldering/preparation) from Adafruit. When you first solder, get someone who has done it before (ideally in the MakerLAB environment). It is a good idea to review this material beforehand so you know what to look at.

-

-

-

-

-Connect it to your pi with Qwiic connector and try running the three example scripts individually to see what the sensor is capable of doing!

-

-```

-(circuitpython) pi@ixe00:~/Interactive-Lab-Hub/Lab 4 $ python proximity_test.py

-...

-(circuitpython) pi@ixe00:~/Interactive-Lab-Hub/Lab 4 $ python gesture_test.py

-...

-(circuitpython) pi@ixe00:~/Interactive-Lab-Hub/Lab 4 $ python color_test.py

-...

-```

-

-You can go the the [Adafruit GitHub Page](https://github.com/adafruit/Adafruit_CircuitPython_APDS9960) to see more examples for this sensor!

-

-#### Rotary Encoder

-

-A rotary encoder is an electro-mechanical device that converts the angular position to analog or digital output signals. The [Adafruit rotary encoder](https://www.adafruit.com/product/4991#technical-details) we ordered for you came with separate breakout board and encoder itself, that is, they will need to be soldered if you have not yet done so! We will be bringing the soldering station to the lab class for you to use, also, you can go to the MakerLAB to do the soldering off-class. Here is some [guidance on soldering](https://learn.adafruit.com/adafruit-guide-excellent-soldering/preparation) from Adafruit. When you first solder, get someone who has done it before (ideally in the MakerLAB environment). It is a good idea to review this material beforehand so you know what to look at.

-

-

-

-

- -

- -

-

-

-Connect it to your pi with Qwiic connector and try running the example script, it comes with an additional button which might be useful for your design!

-

-```

-(circuitpython) pi@ixe00:~/Interactive-Lab-Hub/Lab 4 $ python encoder_test.py

-```

-

-You can go to the [Adafruit Learn Page](https://learn.adafruit.com/adafruit-i2c-qt-rotary-encoder/python-circuitpython) to learn more about the sensor! The sensor actually comes with an LED (neo pixel): Can you try lighting it up?

-

-#### Joystick

-

-

-A [joystick](https://www.sparkfun.com/products/15168) can be used to sense and report the input of the stick for it pivoting angle or direction. It also comes with a button input!

-

-

- -

-

-

-Connect it to your pi with Qwiic connector and try running the example script to see what it can do!

-

-```

-(circuitpython) pi@ixe00:~/Interactive-Lab-Hub/Lab 4 $ python joystick_test.py

-```

-

-You can go to the [SparkFun GitHub Page](https://github.com/sparkfun/Qwiic_Joystick_Py) to learn more about the sensor!

-

-#### Distance Sensor

-

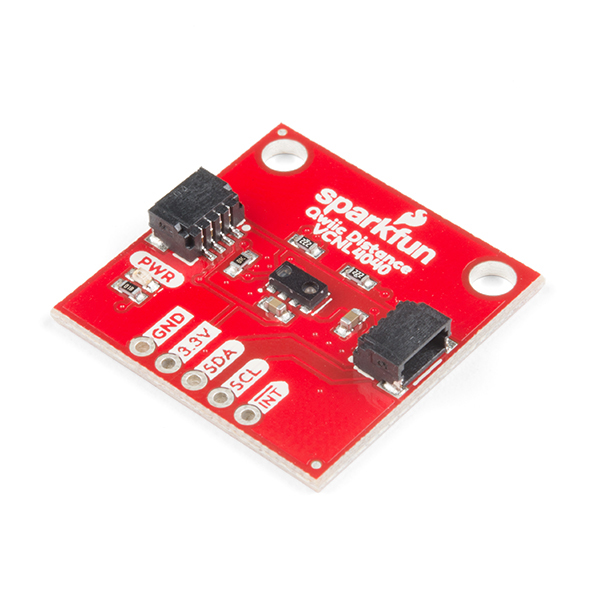

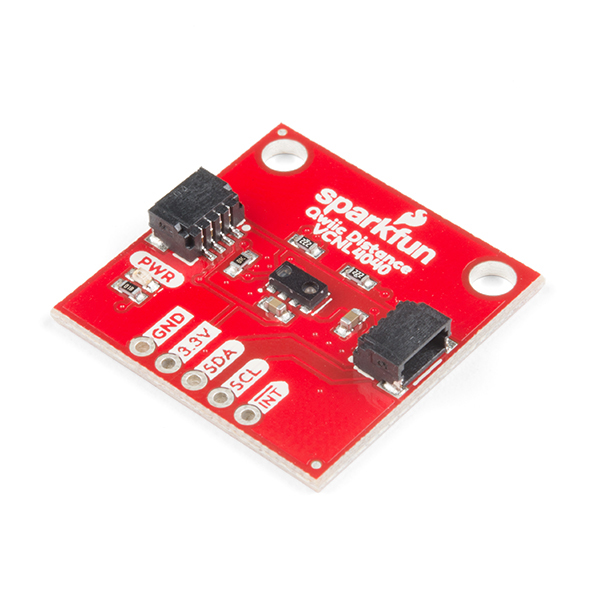

+Light/Proximity/Gesture sensor (APDS-9960)

+Link: https://youtu.be/EVjcOtlsp9w

-Earlier we have asked you to play with the proximity sensor, which is able to sense objects within a short distance. Here, we offer [Sparkfun Proximity Sensor Breakout](https://www.sparkfun.com/products/15177), With the ability to detect objects up to 20cm away.

+Rotary Encoder

+Link: https://youtu.be/T9menfbH3-I

-

- +Joystick

+Link: https://youtu.be/TCmgt5xkJVs

-

+Joystick

+Link: https://youtu.be/TCmgt5xkJVs

-

-

-Connect it to your pi with Qwiic connector and try running the example script to see how it works!

-

-```

-(circuitpython) pi@ixe00:~/Interactive-Lab-Hub/Lab 4 $ python qwiic_distance.py

-```

-

-You can go to the [SparkFun GitHub Page](https://github.com/sparkfun/Qwiic_Proximity_Py) to learn more about the sensor and see other examples

+Distance Sensor

+Link: https://youtu.be/fr77xgzWXX8

### Part C

### Physical considerations for sensing

+The AstroClicker is an interactive device that guides users through the night sky. The joystick serves as the primary input, allowing users to select celestial objects and control their viewing distance.

+ -Usually, sensors need to be positioned in specific locations or orientations to make them useful for their application. Now that you've tried a bunch of the sensors, pick one that you would like to use, and an application where you use the output of that sensor for an interaction. For example, you can use a distance sensor to measure someone's height if you position it overhead and get them to stand under it.

-

-

-**\*\*\*Draw 5 sketches of different ways you might use your sensor, and how the larger device needs to be shaped in order to make the sensor useful.\*\*\***

-

-**\*\*\*What are some things these sketches raise as questions? What do you need to physically prototype to understand how to anwer those questions?\*\*\***

-

-**\*\*\*Pick one of these designs to prototype.\*\*\***

-

-

-### Part D

-### Physical considerations for displaying information and housing parts

-

-

-

-Here is a Pi with a paper faceplate on it to turn it into a display interface:

-

-

-

-Usually, sensors need to be positioned in specific locations or orientations to make them useful for their application. Now that you've tried a bunch of the sensors, pick one that you would like to use, and an application where you use the output of that sensor for an interaction. For example, you can use a distance sensor to measure someone's height if you position it overhead and get them to stand under it.

-

-

-**\*\*\*Draw 5 sketches of different ways you might use your sensor, and how the larger device needs to be shaped in order to make the sensor useful.\*\*\***

-

-**\*\*\*What are some things these sketches raise as questions? What do you need to physically prototype to understand how to anwer those questions?\*\*\***

-

-**\*\*\*Pick one of these designs to prototype.\*\*\***

-

-

-### Part D

-### Physical considerations for displaying information and housing parts

-

-

-

-Here is a Pi with a paper faceplate on it to turn it into a display interface:

-

-

- -

-

-This is fine, but the mounting of the display constrains the display location and orientation a lot. Also, it really only works for applications where people can come and stand over the Pi, or where you can mount the Pi to the wall.

-

-Here is another prototype for a paper display:

-

-

-

-

-This is fine, but the mounting of the display constrains the display location and orientation a lot. Also, it really only works for applications where people can come and stand over the Pi, or where you can mount the Pi to the wall.

-

-Here is another prototype for a paper display:

-

- -

-

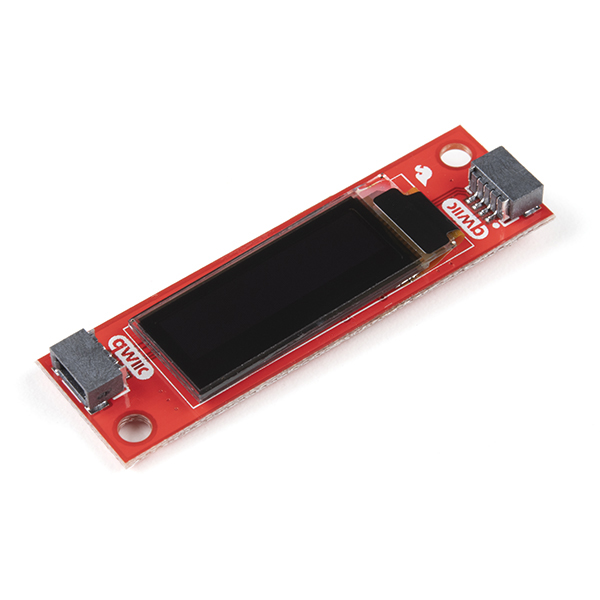

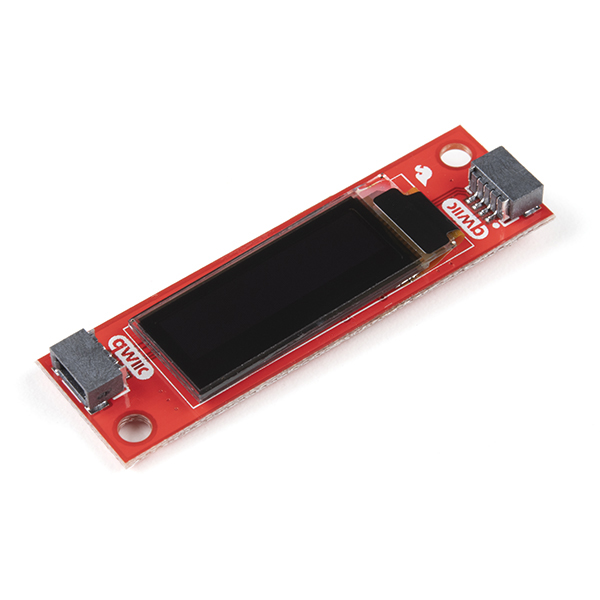

-Your kit includes these [SparkFun Qwiic OLED screens](https://www.sparkfun.com/products/17153). These use less power than the MiniTFTs you have mounted on the GPIO pins of the Pi, but, more importantly, they can be more flexibly mounted elsewhere on your physical interface. The way you program this display is almost identical to the way you program a Pi display. Take a look at `oled_test.py` and some more of the [Adafruit examples](https://github.com/adafruit/Adafruit_CircuitPython_SSD1306/tree/master/examples).

-

-

-

-

-Your kit includes these [SparkFun Qwiic OLED screens](https://www.sparkfun.com/products/17153). These use less power than the MiniTFTs you have mounted on the GPIO pins of the Pi, but, more importantly, they can be more flexibly mounted elsewhere on your physical interface. The way you program this display is almost identical to the way you program a Pi display. Take a look at `oled_test.py` and some more of the [Adafruit examples](https://github.com/adafruit/Adafruit_CircuitPython_SSD1306/tree/master/examples).

-

-

- -

-

-

-

-

-

-It holds a Pi and usb power supply, and provides a front stage on which to put writing, graphics, LEDs, buttons or displays.

-

-This design can be made by scoring a long strip of corrugated cardboard of width X, with the following measurements:

+Our next concept, the City Explorer, is a device that assists users in exploring new cities and uncovering hidden spots in places they already know. Using the joystick, users can select their next destination, and the device automatically records their travel history.

+ -| Y height of box

-| Y height of box

- thickness of cardboard | Z depth of box

- thickness of cardboard | Y height of box | Z depth of box | H height of faceplate

* * * * * (don't make this too short) * * * * *|

-| --- | --- | --- | --- | --- |

+Our next concept, Remote Play, is designed to let users engage with their pets remotely. The device integrates a joystick input with a gyroscopic ball that responds to the user’s movements and commands.

+ -Fold the first flap of the strip so that it sits flush against the back of the face plate, and tape, velcro or hot glue it in place. This will make a H x X interface, with a box of Z x X footprint (which you can adapt to the things you want to put in the box) and a height Y in the back.

+Our next concept, Flashcards, takes inspiration from platforms like Anki that use flashcards to support learning. This version introduces a joystick-based input system, creating a more interactive and engaging study experience.

+

-Fold the first flap of the strip so that it sits flush against the back of the face plate, and tape, velcro or hot glue it in place. This will make a H x X interface, with a box of Z x X footprint (which you can adapt to the things you want to put in the box) and a height Y in the back.

+Our next concept, Flashcards, takes inspiration from platforms like Anki that use flashcards to support learning. This version introduces a joystick-based input system, creating a more interactive and engaging study experience.

+ -Here is an example:

+Our final concept is the Store Navigator: a device designed to help users find their way through complex grocery store aisles. It comes preloaded with the store’s layout, allowing users to locate aisles and check if their desired items are in stock.

+

-Here is an example:

+Our final concept is the Store Navigator: a device designed to help users find their way through complex grocery store aisles. It comes preloaded with the store’s layout, allowing users to locate aisles and check if their desired items are in stock.

+ -

- +Some key questions that emerged from these sketches include:

-Think about how you want to present the information about what your sensor is sensing! Design a paper display for your project that communicates the state of the Pi and a sensor. Ideally you should design it so that you can slide the Pi out to work on the circuit or programming, and then slide it back in and reattach a few wires to be back in operation.

-

-**\*\*\*Sketch 5 designs for how you would physically position your display and any buttons or knobs needed to interact with it.\*\*\***

+- What problem does the device most effectively solve for users, and how can we clearly communicate that value?

+- How can we refine the physical form and interface to make interactions feel natural and satisfying?

+- What sensory feedback (visual, auditory, or haptic) could enhance the user’s sense of connection with the device?

+- How can the technology within the device be optimized for accuracy, responsiveness, and durability in real-world conditions?

+- In what ways can the overall experience be personalized to different types of users or environments?

-**\*\*\*What are some things these sketches raise as questions? What do you need to physically prototype to understand how to anwer those questions?\*\*\***

+After evaluating all the ideas, we’ve chosen to continue developing the **AstroClicker**.

-**\*\*\*Pick one of these display designs to integrate into your prototype.\*\*\***

-**\*\*\*Explain the rationale for the design.\*\*\*** (e.g. Does it need to be a certain size or form or need to be able to be seen from a certain distance?)

+### Part D

+### Physical considerations for displaying information and housing parts

-Build a cardboard prototype of your design.

+Astroclicker Designs:

+

+Some key questions that emerged from these sketches include:

-Think about how you want to present the information about what your sensor is sensing! Design a paper display for your project that communicates the state of the Pi and a sensor. Ideally you should design it so that you can slide the Pi out to work on the circuit or programming, and then slide it back in and reattach a few wires to be back in operation.

-

-**\*\*\*Sketch 5 designs for how you would physically position your display and any buttons or knobs needed to interact with it.\*\*\***

+- What problem does the device most effectively solve for users, and how can we clearly communicate that value?

+- How can we refine the physical form and interface to make interactions feel natural and satisfying?

+- What sensory feedback (visual, auditory, or haptic) could enhance the user’s sense of connection with the device?

+- How can the technology within the device be optimized for accuracy, responsiveness, and durability in real-world conditions?

+- In what ways can the overall experience be personalized to different types of users or environments?

-**\*\*\*What are some things these sketches raise as questions? What do you need to physically prototype to understand how to anwer those questions?\*\*\***

+After evaluating all the ideas, we’ve chosen to continue developing the **AstroClicker**.

-**\*\*\*Pick one of these display designs to integrate into your prototype.\*\*\***

-**\*\*\*Explain the rationale for the design.\*\*\*** (e.g. Does it need to be a certain size or form or need to be able to be seen from a certain distance?)

+### Part D

+### Physical considerations for displaying information and housing parts

-Build a cardboard prototype of your design.

+Astroclicker Designs:

+ +

+ +

+ +

+ +

+ +For our first design, which we based on Prototype 1, we focused on making the device comfortable and practical. Since it’s handheld, we placed the joystick in a spot that feels natural to use. We made sure the speaker faces the user so the sound doesn’t get muffled. We also planned space for ventilation to keep the Raspberry Pi from overheating, along with room for a battery. When building our cardboard prototype, we included these ideas using an Altoids can as a placeholder for the battery and adding a top cutout for airflow around the Raspberry Pi.

-**\*\*\*Document your rough prototype.\*\*\***

+Astroclicker Prototype Video Link: https://youtube.com/shorts/sQySwPO-nW0?feature=share

# LAB PART 2

+** Refer to Nikhil Gangaram's Interactive Lab Hub for the code **

+https://github.com/NikhilGangaram/NG-Interactive-Lab-Hub/tree/Fall2025/Lab%204

### Part 2

Following exploration and reflection from Part 1, complete the "looks like," "works like" and "acts like" prototypes for your design, reiterated below.

-

-

### Part E

-#### Chaining Devices and Exploring Interaction Effects

-

-For Part 2, you will design and build a fun interactive prototype using multiple inputs and outputs. This means chaining Qwiic and STEMMA QT devices (e.g., buttons, encoders, sensors, servos, displays) and/or combining with traditional breadboard prototyping (e.g., LEDs, buzzers, etc.).

-

-**Your prototype should:**

-- Combine at least two different types of input and output devices, inspired by your physical considerations from Part 1.

-- Be playful, creative, and demonstrate multi-input/multi-output interaction.

-

-**Document your system with:**

-- Code for your multi-device demo

-- Photos and/or video of the working prototype in action

-- A simple interaction diagram or sketch showing how inputs and outputs are connected and interact

-- Written reflection: What did you learn about multi-input/multi-output interaction? What was fun, surprising, or challenging?

-

-**Questions to consider:**

-- What new types of interaction become possible when you combine two or more sensors or actuators?

-- How does the physical arrangement of devices (e.g., where the encoder or sensor is placed) change the user experience?

-- What happens if you use one device to control or modulate another (e.g., encoder sets a threshold, sensor triggers an action)?

-- How does the system feel if you swap which device is "primary" and which is "secondary"?

-

-Try chaining different combinations and document what you discover!

-

-See encoder_accel_servo_dashboard.py in the Lab 4 folder for an example of chaining together three devices.

-

-**`Lab 4/encoder_accel_servo_dashboard.py`**

-

-#### Using Multiple Qwiic Buttons: Changing I2C Address (Physically & Digitally)

-

-If you want to use more than one Qwiic Button in your project, you must give each button a unique I2C address. There are two ways to do this:

+Software:

-##### 1. Physically: Soldering Address Jumpers

+* We began prototyping the AstroClicker software, located in the astro_clicker_demo.py file.

+* Our main design goal was to make the program user-friendly and intuitive, while avoiding an overwhelming or restrictive experience.

+* After multiple rounds of prototyping and refinement, we finalized the following code structure:

-On the back of the Qwiic Button, you'll find four solder jumpers labeled A0, A1, A2, and A3. By bridging these with solder, you change the I2C address. Only one button on the chain can use the default address (0x6F).

+1. Initialization and Data Structure

-**Address Table:**

+* Imports essential libraries for hardware interaction, timing, subprocess execution, and argument parsing.

+* The speak_text function handles text-to-speech using the external espeak program and logs all outputs to the console, regardless of the current OUTPUT_MODE (speaker or silent).

-| A3 | A2 | A1 | A0 | Address (hex) |

-|----|----|----|----|---------------|

-| 0 | 0 | 0 | 0 | 0x6F |

-| 0 | 0 | 0 | 1 | 0x6E |

-| 0 | 0 | 1 | 0 | 0x6D |

-| 0 | 0 | 1 | 1 | 0x6C |

-| 0 | 1 | 0 | 0 | 0x6B |

-| 0 | 1 | 0 | 1 | 0x6A |

-| 0 | 1 | 1 | 0 | 0x69 |

-| 0 | 1 | 1 | 1 | 0x68 |

-| 1 | 0 | 0 | 0 | 0x67 |

-| ...| ...| ...| ... | ... |

+Celestial data is divided into three layers based on their distance from Earth:

-For example, if you solder A0 closed (leave A1, A2, A3 open), the address becomes 0x6E.

+* Layer 0 (Closest): CONSTELLATION_DATA

+* Layer 1 (Intermediate): SOLAR_SYSTEM_DATA

+* Layer 2 (Farthest): DEEP_SKY_DATA

-**Soldering Tips:**

-- Use a small amount of solder to bridge the pads for the jumper you want to close.

-- Only one jumper needs to be closed for each address change (see table above).

-- Power cycle the button after changing the jumper.

+------------------------------------------------------------------------

-##### 2. Digitally: Using Software to Change Address

+2. The SkyNavigator State Machine

-You can also change the address in software (temporarily or permanently) using the example script `qwiic_button_ex6_changeI2CAddress.py` in the Lab 4 folder. This is useful if you want to reassign addresses without soldering.

+* The SkyNavigator class manages the user’s state, tracking:

+* The layer_index (starting at 1, representing the Solar System).

+* Which objects have been viewed, using a list called unseen_targets.

-Run the script and follow the prompts:

-```bash

-python qwiic_button_ex6_changeI2CAddress.py

-```

-Enter the new address (e.g., 5B for 0x5B) when prompted. Power cycle the button after changing the address.

+The _set_new_target() method:

+* Randomly selects an available object from the current layer.

+* Resets that layer’s availability once all objects have been viewed.

+* The move(direction) method updates the state based on joystick input:

+* ‘up’ / ‘down’: Adjusts layer_index to zoom in or out, switching between the three celestial layers.

+* ‘left’ / ‘right’: Keeps the user within the current layer and selects a new random target.

+* Built-in boundary checks prevent movement beyond Layer 0 or Layer 2.

+* After every successful movement, the new location or target is announced using the speak_text function.

-**Note:** The software method is less foolproof and you need to make sure to keep track of which button has which address!

+------------------------------------------------------------------------

-##### Using Multiple Buttons in Code

+3.

+* The runExample function initializes the joystick and the SkyNavigator.

+* A welcome message and the initial target's details are spoken aloud.

+* An infinite while loop continuously reads the joystick's horizontal (x_val), vertical (y_val), and button state. It uses a **debounce timer** (MOVE_DEBOUNCE_TIME) to prevent rapid, accidental inputs.

-After setting unique addresses, you can use multiple buttons in your script. See these example scripts in the Lab 4 folder:

+| Input Action | Resulting Action | Output/Narration |

+| :--- | :--- | :--- |

+| **Joystick Button Click (Release)** | Stays at current target. | Reads the **`name`** and **`fact`** of the current target, followed by a prompt for the next action. |

+| **Joystick Up** (Y-Value > 600) | Calls `navigator.move('up')` (Zoom Out/Farther). | Announces the zoom-out and the new target's name/type, or a boundary message. |

+| **Joystick Down** (Y-Value < 400) | Calls `navigator.move('down')` (Zoom In/Closer). | Announces the zoom-in and the new target's name/type, or a boundary message. |

+| **Joystick Left** (X-Value > 600) | Calls `navigator.move('left')` (Scan/New Target). | Announces a scan left and the new target's name/type. |

+| **Joystick Right** (X-Value < 400) | Calls `navigator.move('right')` (Scan/New Target). | Announces a scan right and the new target's name/type. |

-- **`qwiic_1_button.py`**: Basic example for reading a single Qwiic Button (default address 0x6F). Run with:

- ```bash

- python qwiic_1_button.py

- ```

+------------------------------------------------------------------------

-- **`qwiic_button_led_demo.py`**: Demonstrates using two Qwiic Buttons at different addresses (e.g., 0x6F and 0x6E) and controlling their LEDs. Button 1 toggles its own LED; Button 2 toggles both LEDs. Run with:

- ```bash

- python qwiic_button_led_demo.py

- ```

+4.

+* The main() function uses the argparse module to allow the user to optionally specify the output mode (mode speaker or mode silent) when running the script.

+* The program can be cleanly exited by pressing **Ctrl+C**.

-Here is a minimal code example for two buttons:

-```python

-import qwiic_button

+

+For our first design, which we based on Prototype 1, we focused on making the device comfortable and practical. Since it’s handheld, we placed the joystick in a spot that feels natural to use. We made sure the speaker faces the user so the sound doesn’t get muffled. We also planned space for ventilation to keep the Raspberry Pi from overheating, along with room for a battery. When building our cardboard prototype, we included these ideas using an Altoids can as a placeholder for the battery and adding a top cutout for airflow around the Raspberry Pi.

-**\*\*\*Document your rough prototype.\*\*\***

+Astroclicker Prototype Video Link: https://youtube.com/shorts/sQySwPO-nW0?feature=share

# LAB PART 2

+** Refer to Nikhil Gangaram's Interactive Lab Hub for the code **

+https://github.com/NikhilGangaram/NG-Interactive-Lab-Hub/tree/Fall2025/Lab%204

### Part 2

Following exploration and reflection from Part 1, complete the "looks like," "works like" and "acts like" prototypes for your design, reiterated below.

-

-

### Part E

-#### Chaining Devices and Exploring Interaction Effects

-

-For Part 2, you will design and build a fun interactive prototype using multiple inputs and outputs. This means chaining Qwiic and STEMMA QT devices (e.g., buttons, encoders, sensors, servos, displays) and/or combining with traditional breadboard prototyping (e.g., LEDs, buzzers, etc.).

-

-**Your prototype should:**

-- Combine at least two different types of input and output devices, inspired by your physical considerations from Part 1.

-- Be playful, creative, and demonstrate multi-input/multi-output interaction.

-

-**Document your system with:**

-- Code for your multi-device demo

-- Photos and/or video of the working prototype in action

-- A simple interaction diagram or sketch showing how inputs and outputs are connected and interact

-- Written reflection: What did you learn about multi-input/multi-output interaction? What was fun, surprising, or challenging?

-

-**Questions to consider:**

-- What new types of interaction become possible when you combine two or more sensors or actuators?

-- How does the physical arrangement of devices (e.g., where the encoder or sensor is placed) change the user experience?

-- What happens if you use one device to control or modulate another (e.g., encoder sets a threshold, sensor triggers an action)?

-- How does the system feel if you swap which device is "primary" and which is "secondary"?

-

-Try chaining different combinations and document what you discover!

-

-See encoder_accel_servo_dashboard.py in the Lab 4 folder for an example of chaining together three devices.

-

-**`Lab 4/encoder_accel_servo_dashboard.py`**

-

-#### Using Multiple Qwiic Buttons: Changing I2C Address (Physically & Digitally)

-

-If you want to use more than one Qwiic Button in your project, you must give each button a unique I2C address. There are two ways to do this:

+Software:

-##### 1. Physically: Soldering Address Jumpers

+* We began prototyping the AstroClicker software, located in the astro_clicker_demo.py file.

+* Our main design goal was to make the program user-friendly and intuitive, while avoiding an overwhelming or restrictive experience.

+* After multiple rounds of prototyping and refinement, we finalized the following code structure:

-On the back of the Qwiic Button, you'll find four solder jumpers labeled A0, A1, A2, and A3. By bridging these with solder, you change the I2C address. Only one button on the chain can use the default address (0x6F).

+1. Initialization and Data Structure

-**Address Table:**

+* Imports essential libraries for hardware interaction, timing, subprocess execution, and argument parsing.

+* The speak_text function handles text-to-speech using the external espeak program and logs all outputs to the console, regardless of the current OUTPUT_MODE (speaker or silent).

-| A3 | A2 | A1 | A0 | Address (hex) |

-|----|----|----|----|---------------|

-| 0 | 0 | 0 | 0 | 0x6F |

-| 0 | 0 | 0 | 1 | 0x6E |

-| 0 | 0 | 1 | 0 | 0x6D |

-| 0 | 0 | 1 | 1 | 0x6C |

-| 0 | 1 | 0 | 0 | 0x6B |

-| 0 | 1 | 0 | 1 | 0x6A |

-| 0 | 1 | 1 | 0 | 0x69 |

-| 0 | 1 | 1 | 1 | 0x68 |

-| 1 | 0 | 0 | 0 | 0x67 |

-| ...| ...| ...| ... | ... |

+Celestial data is divided into three layers based on their distance from Earth:

-For example, if you solder A0 closed (leave A1, A2, A3 open), the address becomes 0x6E.

+* Layer 0 (Closest): CONSTELLATION_DATA

+* Layer 1 (Intermediate): SOLAR_SYSTEM_DATA

+* Layer 2 (Farthest): DEEP_SKY_DATA

-**Soldering Tips:**

-- Use a small amount of solder to bridge the pads for the jumper you want to close.

-- Only one jumper needs to be closed for each address change (see table above).

-- Power cycle the button after changing the jumper.

+------------------------------------------------------------------------

-##### 2. Digitally: Using Software to Change Address

+2. The SkyNavigator State Machine

-You can also change the address in software (temporarily or permanently) using the example script `qwiic_button_ex6_changeI2CAddress.py` in the Lab 4 folder. This is useful if you want to reassign addresses without soldering.

+* The SkyNavigator class manages the user’s state, tracking:

+* The layer_index (starting at 1, representing the Solar System).

+* Which objects have been viewed, using a list called unseen_targets.

-Run the script and follow the prompts:

-```bash

-python qwiic_button_ex6_changeI2CAddress.py

-```

-Enter the new address (e.g., 5B for 0x5B) when prompted. Power cycle the button after changing the address.

+The _set_new_target() method:

+* Randomly selects an available object from the current layer.

+* Resets that layer’s availability once all objects have been viewed.

+* The move(direction) method updates the state based on joystick input:

+* ‘up’ / ‘down’: Adjusts layer_index to zoom in or out, switching between the three celestial layers.

+* ‘left’ / ‘right’: Keeps the user within the current layer and selects a new random target.

+* Built-in boundary checks prevent movement beyond Layer 0 or Layer 2.

+* After every successful movement, the new location or target is announced using the speak_text function.

-**Note:** The software method is less foolproof and you need to make sure to keep track of which button has which address!

+------------------------------------------------------------------------

-##### Using Multiple Buttons in Code

+3.

+* The runExample function initializes the joystick and the SkyNavigator.

+* A welcome message and the initial target's details are spoken aloud.

+* An infinite while loop continuously reads the joystick's horizontal (x_val), vertical (y_val), and button state. It uses a **debounce timer** (MOVE_DEBOUNCE_TIME) to prevent rapid, accidental inputs.

-After setting unique addresses, you can use multiple buttons in your script. See these example scripts in the Lab 4 folder:

+| Input Action | Resulting Action | Output/Narration |

+| :--- | :--- | :--- |

+| **Joystick Button Click (Release)** | Stays at current target. | Reads the **`name`** and **`fact`** of the current target, followed by a prompt for the next action. |

+| **Joystick Up** (Y-Value > 600) | Calls `navigator.move('up')` (Zoom Out/Farther). | Announces the zoom-out and the new target's name/type, or a boundary message. |

+| **Joystick Down** (Y-Value < 400) | Calls `navigator.move('down')` (Zoom In/Closer). | Announces the zoom-in and the new target's name/type, or a boundary message. |

+| **Joystick Left** (X-Value > 600) | Calls `navigator.move('left')` (Scan/New Target). | Announces a scan left and the new target's name/type. |

+| **Joystick Right** (X-Value < 400) | Calls `navigator.move('right')` (Scan/New Target). | Announces a scan right and the new target's name/type. |

-- **`qwiic_1_button.py`**: Basic example for reading a single Qwiic Button (default address 0x6F). Run with:

- ```bash

- python qwiic_1_button.py

- ```

+------------------------------------------------------------------------

-- **`qwiic_button_led_demo.py`**: Demonstrates using two Qwiic Buttons at different addresses (e.g., 0x6F and 0x6E) and controlling their LEDs. Button 1 toggles its own LED; Button 2 toggles both LEDs. Run with:

- ```bash

- python qwiic_button_led_demo.py

- ```

+4.

+* The main() function uses the argparse module to allow the user to optionally specify the output mode (mode speaker or mode silent) when running the script.

+* The program can be cleanly exited by pressing **Ctrl+C**.

-Here is a minimal code example for two buttons:

-```python

-import qwiic_button

+ -# Default button (0x6F)

-button1 = qwiic_button.QwiicButton()

-# Button with A0 soldered (0x6E)

-button2 = qwiic_button.QwiicButton(0x6E)

+Hardware

-button1.begin()

-button2.begin()

+* We began developing the hardware prototype, focusing on the following main considerations:

+* The device should be handheld, with the joystick positioned in an ergonomic spot for comfortable use.

+* The speaker should face the user to ensure clear audio output and prevent muffled sound.

+* The Raspberry Pi should have proper ventilation to avoid overheating, along with space for a battery to power the system.

-while True:

- if button1.is_button_pressed():

- print("Button 1 pressed!")

- if button2.is_button_pressed():

- print("Button 2 pressed!")

-```

+Most of these considerations were carried over from the cardboard prototype, though we made several design adjustments to improve ergonomics and overall usability.

-For more details, see the [Qwiic Button Hookup Guide](https://learn.sparkfun.com/tutorials/qwiic-button-hookup-guide/all#i2c-address).

+Below are images of the updated hardware prototype.

----

+

-# Default button (0x6F)

-button1 = qwiic_button.QwiicButton()

-# Button with A0 soldered (0x6E)

-button2 = qwiic_button.QwiicButton(0x6E)

+Hardware

-button1.begin()

-button2.begin()

+* We began developing the hardware prototype, focusing on the following main considerations:

+* The device should be handheld, with the joystick positioned in an ergonomic spot for comfortable use.

+* The speaker should face the user to ensure clear audio output and prevent muffled sound.

+* The Raspberry Pi should have proper ventilation to avoid overheating, along with space for a battery to power the system.

-while True:

- if button1.is_button_pressed():

- print("Button 1 pressed!")

- if button2.is_button_pressed():

- print("Button 2 pressed!")

-```

+Most of these considerations were carried over from the cardboard prototype, though we made several design adjustments to improve ergonomics and overall usability.

-For more details, see the [Qwiic Button Hookup Guide](https://learn.sparkfun.com/tutorials/qwiic-button-hookup-guide/all#i2c-address).

+Below are images of the updated hardware prototype.

----

+ +

+ +

+ -### PCF8574 GPIO Expander: Add More Pins Over I²C

-Sometimes your Pi’s header GPIO pins are already full (e.g., with a display or HAT). That’s where an I²C GPIO expander comes in handy.

-

-We use the Adafruit PCF8574 I²C GPIO Expander, which gives you 8 extra digital pins over I²C. It’s a great way to prototype with LEDs, buttons, or other components on the breadboard without worrying about pin conflicts—similar to how Arduino users often expand their pinouts when prototyping physical interactions.

-

-**Why is this useful?**

-- You only need two wires (I²C: SDA + SCL) to unlock 8 extra GPIOs.

-- It integrates smoothly with CircuitPython and Blinka.

-- It allows a clean prototyping workflow when the Pi’s 40-pin header is already occupied by displays, HATs, or sensors.

-- Makes breadboard setups feel more like an Arduino-style prototyping environment where it’s easy to wire up interaction elements.

-

-**Demo Script:** `Lab 4/gpio_expander.py`

-

-

-### PCF8574 GPIO Expander: Add More Pins Over I²C

-Sometimes your Pi’s header GPIO pins are already full (e.g., with a display or HAT). That’s where an I²C GPIO expander comes in handy.

-

-We use the Adafruit PCF8574 I²C GPIO Expander, which gives you 8 extra digital pins over I²C. It’s a great way to prototype with LEDs, buttons, or other components on the breadboard without worrying about pin conflicts—similar to how Arduino users often expand their pinouts when prototyping physical interactions.

-

-**Why is this useful?**

-- You only need two wires (I²C: SDA + SCL) to unlock 8 extra GPIOs.

-- It integrates smoothly with CircuitPython and Blinka.

-- It allows a clean prototyping workflow when the Pi’s 40-pin header is already occupied by displays, HATs, or sensors.

-- Makes breadboard setups feel more like an Arduino-style prototyping environment where it’s easy to wire up interaction elements.

-

-**Demo Script:** `Lab 4/gpio_expander.py`

-

-

-  -

-

-

-We connected 8 LEDs (through 220 Ω resistors) to the expander and ran a little light show. The script cycles through three patterns:

-- Chase (one LED at a time, left to right)

-- Knight Rider (back-and-forth sweep)

-- Disco (random blink chaos)

-

-Every few runs, the script swaps to the next pattern automatically:

-```bash

-python gpio_expander.py

-```

-

-This is a playful way to visualize how the expander works, but the same technique applies if you wanted to prototype buttons, switches, or other interaction elements. It’s a lightweight, flexible addition to your prototyping toolkit.

-

----

-

-### Servo Control with SparkFun Servo pHAT

-For this lab, you will use the **SparkFun Servo pHAT** to control a micro servo (such as the Miuzei MS18 or similar 9g servo). The Servo pHAT stacks directly on top of the Adafruit Mini PiTFT (135×240) display without pin conflicts:

-- The Mini PiTFT uses SPI (GPIO22, 23, 24, 25) for display and buttons ([SPI pinout](https://pinout.xyz/pinout/spi)).

-- The Servo pHAT uses I²C (GPIO2 & 3) for the PCA9685 servo driver ([I2C pinout](https://pinout.xyz/pinout/i2c)).

-- Since SPI and I²C are separate buses, you can use both boards together.

-**⚡ Power:**

-- Plug a USB-C cable into the Servo pHAT to provide enough current for the servos. The Pi itself should still be powered by its own USB-C supply. Do NOT power servos from the Pi’s 5V rail.

-

-

-  -

-

-

-**Basic Python Example:**

-We provide a simple example script: `Lab 4/pi_servo_hat_test.py` (requires the `pi_servo_hat` Python package).

-Run the example:

-```

-python pi_servo_hat_test.py

-```