+

+

+

+# Prep for Part 2

+Done!

-The Raspberry Pi 4 has a variety of interfacing options. When you plug the Pi in, the red power LED turns on. Any time the SD card is accessed, the green LED flashes. It has standard USB and HDMI ports. Less familiar is its set of 20x2 pin headers, which let you connect various peripherals.

+---


- +# Lab 2 Part 2

-To learn more about any individual pin and what it is for, go to [pinout.xyz](https://pinout.xyz/pinout/3v3_power) and click on the pin. Some terms may be unfamiliar, but we will go over the relevant ones as they come up.

+## Modify the barebones clock to make it your own

-### Hardware (you have already done this in the prep)

+I created `screen_clock_vlm.py` based on `screen_clock.py` for our **VLT pipeline**. Instead of just printing the system time, the script captures an image from the connected webcam, passes it to the local VLM, and shows both the **predicted “AI time”** and the **real time** side by side.
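The flow described above (capture a frame, ask the model, show both times) can be sketched as follows. This is a minimal illustration, not the contents of `screen_clock_vlm.py`: the names `clock_lines`, `capture`, and `ask_vlm` are hypothetical, and the camera and model are passed in as plain callables so the sketch runs without hardware.

```python
from datetime import datetime
from typing import Callable


def clock_lines(
    capture: Callable[[], bytes],
    ask_vlm: Callable[[bytes], str],
    now: Callable[[], datetime] = datetime.now,
) -> tuple[str, str]:
    """Return the two text lines to draw: the model's guess and the real time."""
    image = capture()                # hypothetical: grab one webcam frame
    guess = ask_vlm(image).strip()   # hypothetical: model's time estimate as text
    real = now().strftime("%H:%M:%S")
    return f"AI time:   {guess}", f"Real time: {real}"


if __name__ == "__main__":
    # Stand-ins so the sketch runs without a camera or a model.
    for line in clock_lines(lambda: b"\x00", lambda image: " about 3 pm \n"):
        print(line)
```

Keeping the capture and model calls behind callables also makes it easy to swap in a slower or faster model without touching the drawing code.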

-From your kit take out the display and the [Raspberry Pi 5](https://www.raspberrypi.com/products/raspberry-pi-5/).

+=======

+## Assignment that was formerly Lab 2 Part E.

+### Modify the barebones clock to make it your own

-Line up the screen and press it onto the headers. The hole in the screen should match up with the hole on the Raspberry Pi.

+Does time have to be linear? How do you measure a year? [In daylights? In midnights? In cups of coffee?](https://www.youtube.com/watch?v=wsj15wPpjLY)


-

- -

- -

-

+ +

+

+

+

+

+

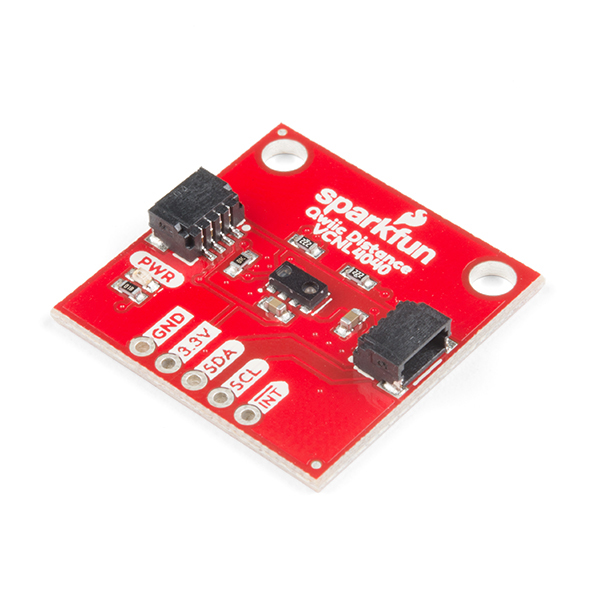

+Connect it to your Pi with a Qwiic connector and try running the three example scripts individually to see what the sensor is capable of doing!

+

+```

+(circuitpython) pi@ixe00:~/Interactive-Lab-Hub/Lab 4 $ python proximity_test.py

+...

+(circuitpython) pi@ixe00:~/Interactive-Lab-Hub/Lab 4 $ python gesture_test.py

+...

+(circuitpython) pi@ixe00:~/Interactive-Lab-Hub/Lab 4 $ python color_test.py

+...

+```

+

+You can go to the [Adafruit GitHub Page](https://github.com/adafruit/Adafruit_CircuitPython_APDS9960) to see more examples for this sensor!

+

+#### Rotary Encoder

+

+A rotary encoder is an electro-mechanical device that converts angular position into analog or digital output signals. The [Adafruit rotary encoder](https://www.adafruit.com/product/4991#technical-details) we ordered for you comes as a separate breakout board and encoder, so the two need to be soldered together if you have not yet done so! We will bring a soldering station to the lab class for you to use; you can also go to the MakerLAB to solder outside of class. Here is some [guidance on soldering](https://learn.adafruit.com/adafruit-guide-excellent-soldering/preparation) from Adafruit. When you first solder, get help from someone who has done it before (ideally in the MakerLAB environment). It is a good idea to review this material beforehand so you know what to look for.
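To make "converts angular position into a digital signal" concrete, here is a small sketch that maps a signed step count (the kind of number an encoder driver typically reports) to an angle. The function name is hypothetical, and the default of 24 steps per revolution is an assumption for illustration only; check the datasheet for your part.

```python
def position_to_degrees(position: int, steps_per_rev: int = 24) -> float:
    """Map a signed encoder step count to an angle in [0, 360).

    steps_per_rev=24 is an assumed value, not taken from the lab materials.
    """
    if steps_per_rev <= 0:
        raise ValueError("steps_per_rev must be positive")
    # Python's % always returns a non-negative result for a positive divisor,
    # so counter-clockwise (negative) counts wrap around correctly.
    return (position % steps_per_rev) * 360.0 / steps_per_rev


if __name__ == "__main__":
    for clicks in (0, 6, 24, -1):
        print(clicks, "->", position_to_degrees(clicks))
```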

+

+

+

+


+

+

+

+

+ +

+ +

+

+ +

+

+ +

+

+

+

+

+

+This is fine, but the mounting of the display constrains the display location and orientation a lot. Also, it really only works for applications where people can come and stand over the Pi, or where you can mount the Pi to the wall.

+

+Here is another prototype for a paper display:

+

+

+

+


+

+ +

+

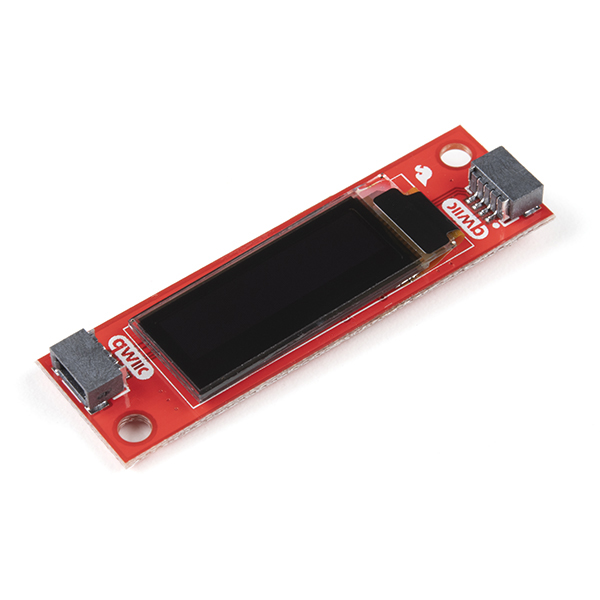

+Your kit includes these [SparkFun Qwiic OLED screens](https://www.sparkfun.com/products/17153). These use less power than the MiniTFTs you have mounted on the GPIO pins of the Pi, but, more importantly, they can be more flexibly mounted elsewhere on your physical interface. The way you program this display is almost identical to the way you program a Pi display. Take a look at `oled_test.py` and some more of the [Adafruit examples](https://github.com/adafruit/Adafruit_CircuitPython_SSD1306/tree/master/examples).

+

+

+

+


+

+

+ +

+

+

+

+

+Think about how you want to present the information about what your sensor is sensing! Design a paper display for your project that communicates the state of the Pi and a sensor. Ideally you should design it so that you can slide the Pi out to work on the circuit or programming, and then slide it back in and reattach a few wires to be back in operation.

+

+**\*\*\*Sketch 5 designs for how you would physically position your display and any buttons or knobs needed to interact with it.\*\*\***

+

+**\*\*\*What are some things these sketches raise as questions? What do you need to physically prototype to understand how to answer those questions?\*\*\***

+

+**\*\*\*Pick one of these display designs to integrate into your prototype.\*\*\***

+

+**\*\*\*Explain the rationale for the design.\*\*\*** (e.g. Does it need to be a certain size or form or need to be able to be seen from a certain distance?)

+

+Build a cardboard prototype of your design.

+

+

+**\*\*\*Document your rough prototype.\*\*\***

+

+

+# LAB PART 2

+

+### Part 2

+

+Following exploration and reflection from Part 1, complete the "looks like," "works like" and "acts like" prototypes for your design, reiterated below.

+

+

+

+### Part E

+

+#### Chaining Devices and Exploring Interaction Effects

+

+For Part 2, you will design and build a fun interactive prototype using multiple inputs and outputs. This means chaining Qwiic and STEMMA QT devices (e.g., buttons, encoders, sensors, servos, displays) and/or combining with traditional breadboard prototyping (e.g., LEDs, buzzers, etc.).

+

+**Your prototype should:**

+- Combine at least two different types of input and output devices, inspired by your physical considerations from Part 1.

+- Be playful, creative, and demonstrate multi-input/multi-output interaction.

+

+**Document your system with:**

+- Code for your multi-device demo

+- Photos and/or video of the working prototype in action

+- A simple interaction diagram or sketch showing how inputs and outputs are connected and interact

+- Written reflection: What did you learn about multi-input/multi-output interaction? What was fun, surprising, or challenging?

+

+**Questions to consider:**

+- What new types of interaction become possible when you combine two or more sensors or actuators?

+- How does the physical arrangement of devices (e.g., where the encoder or sensor is placed) change the user experience?

+- What happens if you use one device to control or modulate another (e.g., encoder sets a threshold, sensor triggers an action)?

+- How does the system feel if you swap which device is "primary" and which is "secondary"?

+

+Try chaining different combinations and document what you discover!

+

+See `encoder_accel_servo_dashboard.py` in the Lab 4 folder for an example of chaining together three devices.

+

+**`Lab 4/encoder_accel_servo_dashboard.py`**
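As a hardware-free illustration of the "encoder sets a threshold, sensor triggers an action" idea from the questions above, the sketch below keeps the interaction logic in two plain functions. It is not taken from `encoder_accel_servo_dashboard.py`; the function names, the 0 to 255 range, and the step size are all assumptions chosen for the example.

```python
def threshold_from_encoder(position: int, low: int = 0, high: int = 255, step: int = 5) -> int:
    """Turn encoder clicks into a threshold, clamped to [low, high]."""
    return max(low, min(high, low + position * step))


def should_trigger(sensor_value: int, encoder_position: int) -> bool:
    """True when the sensor reading reaches the threshold set by the encoder."""
    return sensor_value >= threshold_from_encoder(encoder_position)


if __name__ == "__main__":
    # Pretend readings: (sensor value, encoder position)
    for sensor, clicks in [(60, 10), (40, 10), (40, 2)]:
        print(sensor, clicks, should_trigger(sensor, clicks))
```

Separating the mapping from the device reads makes it easy to swap which device is "primary": pass the sensor value in as the threshold source and the encoder as the trigger, and compare how the interaction feels.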

+

+#### Using Multiple Qwiic Buttons: Changing I2C Address (Physically & Digitally)

+

+If you want to use more than one Qwiic Button in your project, you must give each button a unique I2C address. There are two ways to do this:

+

+##### 1. Physically: Soldering Address Jumpers

+

+On the back of the Qwiic Button, you'll find four solder jumpers labeled A0, A1, A2, and A3. By bridging these with solder, you change the I2C address. Only one button on the chain can use the default address (0x6F).

+

+**Address Table:**

+

+| A3 | A2 | A1 | A0 | Address (hex) |
+|----|----|----|----|---------------|
+| 0 | 0 | 0 | 0 | 0x6F |
+| 0 | 0 | 0 | 1 | 0x6E |
+| 0 | 0 | 1 | 0 | 0x6D |
+| 0 | 0 | 1 | 1 | 0x6C |
+| 0 | 1 | 0 | 0 | 0x6B |
+| 0 | 1 | 0 | 1 | 0x6A |
+| 0 | 1 | 1 | 0 | 0x69 |
+| 0 | 1 | 1 | 1 | 0x68 |
+| 1 | 0 | 0 | 0 | 0x67 |
+| ... | ... | ... | ... | ... |

+

+For example, if you solder A0 closed (leave A1, A2, A3 open), the address becomes 0x6E.

+

+**Soldering Tips:**

+- Use a small amount of solder to bridge the pads for the jumper you want to close.

+- Close every jumper marked 1 in the table row for the address you want; some addresses need more than one jumper closed (see table above).

+- Power cycle the button after changing the jumper.

+

+##### 2. Digitally: Using Software to Change Address

+

+You can also change the address in software (temporarily or permanently) using the example script `qwiic_button_ex6_changeI2CAddress.py` in the Lab 4 folder. This is useful if you want to reassign addresses without soldering.

+

+Run the script and follow the prompts:

+```bash

+python qwiic_button_ex6_changeI2CAddress.py

+```

+Enter the new address (e.g., 5B for 0x5B) when prompted. Power cycle the button after changing the address.

+

+**Note:** The software method is less foolproof and you need to make sure to keep track of which button has which address!
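Since the script asks for the address as bare hex (e.g., `5B`), and keeping track of addresses is on you, a small helper like the one below can catch typos and duplicates before you touch the hardware. This is a sketch with hypothetical names, not part of `qwiic_button_ex6_changeI2CAddress.py`. The 0x08 to 0x77 range is the general non-reserved 7-bit I2C range; the script itself may enforce different limits.

```python
def parse_i2c_address(text: str) -> int:
    """Parse '5B' or '0x5B' and check it is a usable 7-bit I2C address."""
    value = int(text.strip(), 16)  # base 16 accepts an optional 0x prefix
    if not 0x08 <= value <= 0x77:
        raise ValueError(f"0x{value:02X} is outside the usable range 0x08-0x77")
    return value


def find_conflicts(assignments: dict[str, int]) -> dict[int, list[str]]:
    """Return {address: [names]} for every address claimed by more than one device."""
    by_address: dict[int, list[str]] = {}
    for name, address in assignments.items():
        by_address.setdefault(address, []).append(name)
    return {addr: names for addr, names in by_address.items() if len(names) > 1}


if __name__ == "__main__":
    plan = {"start button": parse_i2c_address("6F"), "stop button": parse_i2c_address("5B")}
    print(find_conflicts(plan) or "no address conflicts")
```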

+

+

+##### Using Multiple Buttons in Code

+

+After setting unique addresses, you can use multiple buttons in your script. See these example scripts in the Lab 4 folder:

+

+- **`qwiic_1_button.py`**: Basic example for reading a single Qwiic Button (default address 0x6F). Run with:

+ ```bash

+ python qwiic_1_button.py

+ ```

+

+- **`qwiic_button_led_demo.py`**: Demonstrates using two Qwiic Buttons at different addresses (e.g., 0x6F and 0x6E) and controlling their LEDs. Button 1 toggles its own LED; Button 2 toggles both LEDs. Run with:

+ ```bash

+ python qwiic_button_led_demo.py

+ ```

+

+Here is a minimal code example for two buttons:

+```python

+import time
+
+import qwiic_button
+
+# Default button (0x6F)
+button1 = qwiic_button.QwiicButton()
+# Button with A0 soldered (0x6E)
+button2 = qwiic_button.QwiicButton(0x6E)
+
+button1.begin()
+button2.begin()
+
+while True:
+    if button1.is_button_pressed():
+        print("Button 1 pressed!")
+    if button2.is_button_pressed():
+        print("Button 2 pressed!")
+    time.sleep(0.05)  # short pause so the loop does not flood the I2C bus

+```

+

+For more details, see the [Qwiic Button Hookup Guide](https://learn.sparkfun.com/tutorials/qwiic-button-hookup-guide/all#i2c-address).

+

+---

+

+### PCF8574 GPIO Expander: Add More Pins Over I²C

+

+Sometimes your Pi’s header GPIO pins are already full (e.g., with a display or HAT). That’s where an I²C GPIO expander comes in handy.

+

+We use the Adafruit PCF8574 I²C GPIO Expander, which gives you 8 extra digital pins over I²C. It’s a great way to prototype with LEDs, buttons, or other components on the breadboard without worrying about pin conflicts—similar to how Arduino users often expand their pinouts when prototyping physical interactions.

+

+**Why is this useful?**

+- You only need two wires (I²C: SDA + SCL) to unlock 8 extra GPIOs.

+- It integrates smoothly with CircuitPython and Blinka.

+- It allows a clean prototyping workflow when the Pi’s 40-pin header is already occupied by displays, HATs, or sensors.

+- Makes breadboard setups feel more like an Arduino-style prototyping environment where it’s easy to wire up interaction elements.

+

+**Demo Script:** `Lab 4/gpio_expander.py`
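The PCF8574 presents its 8 pins as a single byte on the I²C bus, so changing one pin means changing one bit while leaving the other seven alone. The sketch below shows only that bit bookkeeping in plain Python; the helper names are hypothetical, and it is not the Adafruit library API or the contents of `gpio_expander.py`.

```python
def set_pin(state: int, pin: int, high: bool) -> int:
    """Return the 8-bit port value with one pin (0-7) set high or low."""
    if not 0 <= pin <= 7:
        raise ValueError("pin must be between 0 and 7")
    mask = 1 << pin
    return (state | mask) if high else (state & ~mask & 0xFF)


def read_pin(state: int, pin: int) -> bool:
    """Return True if the given pin (0-7) is high in the 8-bit port value."""
    if not 0 <= pin <= 7:
        raise ValueError("pin must be between 0 and 7")
    return bool(state & (1 << pin))


if __name__ == "__main__":
    port = 0x00
    port = set_pin(port, 3, True)   # e.g. turn on an LED wired to pin 3
    print(f"port = 0b{port:08b}, pin 3 high: {read_pin(port, 3)}")
```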

+

+

+


+

+

+  +

+

+  +

+


+

+

+

+

+

+

+

+

+

+

+### Part B

+### Send and Receive on your Pi

+

+[sender.py](./sender.py) and [reader.py](./reader.py) show the basics of using MQTT in Python. Let's spend a few minutes running them to see how messages are sent and displayed. Before working on your Pi, keep MQTT Explorer on your laptop connected to `farlab.infosci.cornell.edu/8883`.

+

+**Running Examples on Pi**

+

+* Install the packages from `requirements.txt` under a virtual environment:

+

+ ```

+ pi@raspberrypi:~/Interactive-Lab-Hub $ source .venv/bin/activate

+ (circuitpython) pi@raspberrypi:~/Interactive-Lab-Hub $ cd Lab\ 6

+ (circuitpython) pi@raspberrypi:~/Interactive-Lab-Hub/Lab 6 $ pip install -r requirements.txt

+ ...

+ ```

+* Run `sender.py`, fill in a topic name (should start with `IDD/`), then start sending messages. You should be able to see them on MQTT Explorer.

+

+ ```

+ (circuitpython) pi@raspberrypi:~/Interactive-Lab-Hub/Lab 6 $ python sender.py

+ >> topic: IDD/AlexandraTesting

+ now writing to topic IDD/AlexandraTesting

+ type new-topic to swich topics

+ >> message: testtesttest

+ ...

+ ```

+* Run `reader.py`, and you should see any messages being published to `IDD/` subtopics. Type a message inside MQTT explorer and see if you can receive it with `reader.py`.

+

+ ```

+ (circuitpython) pi@raspberrypi:~/Interactive-Lab-Hub/Lab 6 $ python reader.py

+ ...

+ ```
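Seeing every message under `IDD/` is typically done in MQTT with a wildcard subscription such as `IDD/#`; whether `reader.py` uses exactly that filter is an assumption here. The sketch below is a simplified, dependency-free version of MQTT topic-filter matching (`+` matches one level, `#` matches everything below). It is not the client library's implementation and ignores special cases such as `$`-prefixed topics.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic matches a subscription filter."""
    filter_parts = topic_filter.split("/")
    topic_parts = topic.split("/")
    for i, part in enumerate(filter_parts):
        if part == "#":
            # Multi-level wildcard: only valid as the last filter level.
            return i == len(filter_parts) - 1
        if i >= len(topic_parts):
            return False
        if part != "+" and part != topic_parts[i]:
            return False
    return len(filter_parts) == len(topic_parts)


if __name__ == "__main__":
    for topic in ("IDD/AlexandraTesting", "IDD/a/b", "other/topic"):
        print(topic, topic_matches("IDD/#", topic))
```

Choosing a narrower filter (for example `IDD/yourname/+`) keeps your reader from printing everyone else's traffic when the whole class is publishing at once.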

+

+

+

+

+


+

+ +

+

+**\*\*\*Consider how you might use this messaging system on interactive devices, and draw/write down 5 ideas here.\*\*\***

+

+### Part C

+### Streaming a Sensor

+

+We have included an updated example from [lab 4](https://github.com/FAR-Lab/Interactive-Lab-Hub/tree/Fall2021/Lab%204) that streams the [capacitive sensor](https://learn.adafruit.com/adafruit-mpr121-gator) inputs over MQTT.

+

+Plug in the capacitive sensor board with the Qwiic connector. Use the alligator clips to connect a Twizzler (or any other things you used back in Lab 4) and run the example script:

+

+

+

+


+

+

+ +

+ +

+ +

+ +

+

+  +

+  +

+  +

+

Start a video session via the generation server, then open:

+http://localhost:7986/view/<uid>+

Example:

+http://localhost:7986/view/abc123def456+
+"""
+
+
+@app.get("/view/{uid}", response_class=HTMLResponse)
+def view(uid: str):
+    return f"""
+
+